Data Exploration by Sonification

See www.sonification.de for more up-to-date information

The way we understand data is determined by the way we access it. As most data presentation techniques up to now have been based on data visualization, researchers have developed a strongly visual imagination of data spaces.

However, there are alternatives to visual data presentation, which might be superior for certain types of data. One of them is acoustic data presentation, or Sonification. Sonification is a newly emerging field in HCI research that closes a gap between the usually complex visual computer displays and the very poor acoustic interface to the user.

Why use senses other than vision?


We are capable of discerning subtle changes in acoustic patterns, as medicine shows to a high degree: physicians routinely perform acoustic data analysis when they use a stethoscope to listen to breath sounds and heart tones. We think there is a high potential in using acoustic data presentation to understand data in new ways, which may accelerate, simplify and support the data mining process.

Just as a car mechanic listens to the sound of an engine to learn about causes of malfunction and get hints on what to do, the data miner may listen to the data and get cues about which structures are present in it: sonification may give information about the clustering of the data, the local intrinsic data dimensionality, class overlap, topological structures, etc.

Besides that, there are other interesting applications of sonification: for example, it can be applied to monitor the state of a neural network during learning, or to monitor large amounts of data such as long-term EEG recordings.

In classification tasks where a human expert evaluates the data, sonification can accelerate classification and improve performance.

Some Sonification Types


Alarm signals

The first applications of acoustic signals in human-computer interfaces were alarm signals. Such a signal reaches the user even if (s)he is occupied with other things. The simplest data sonification is done by the regular PC speaker: the BIOS announces the state of the hardware acoustically. Acoustic signals and their efficiency for alarm purposes have been studied extensively for cockpit applications. Signals of this kind can even be used in data mining, e.g. to give navigational cues during the inspection of data spaces, but they are rather poor for the complete presentation of complex data.
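A minimal sketch of an alarm-style display, assuming a monitored value that is checked once per time slot against a threshold. The 880 Hz beep, the 8 kHz sample rate and the names `beep` and `alarm_track` are illustrative choices, not part of any particular system; samples are plain floats that could be sent to any audio output.

```python
import math

SAMPLE_RATE = 8000  # samples per second; assumed, low but enough for a beep


def beep(freq_hz: float, duration_s: float, amplitude: float = 0.5) -> list[float]:
    """A plain sine burst of the given frequency and length."""
    n = int(SAMPLE_RATE * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n)]


def alarm_track(values: list[float], threshold: float,
                slot_s: float = 0.1) -> list[float]:
    """One time slot per monitored value: a beep when the value exceeds
    the threshold, silence otherwise."""
    tone = beep(880.0, slot_s)
    silence = [0.0] * len(tone)
    out: list[float] = []
    for v in values:
        out.extend(tone if v > threshold else silence)
    return out


# Usage: only the second reading (5.0) crosses the threshold of 3.0.
track = alarm_track([1.0, 5.0, 2.0], threshold=3.0)
```

The signal carries a single bit per slot, which is exactly why such displays are good at grabbing attention but poor at presenting complex data.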

Audification

This method translates the data values directly into amplitude values of the waveform. It is applicable if the data itself is a time series, e.g. data from a dynamic system such as a neural network, or seismic data. This sonification and related forms are quite useful for monitoring large data sets in strongly compressed time. Tools that scale the pitch, or stretch the time scale without affecting the pitch (a kind of acoustic microscope), are useful for enhancing this form of sonification.
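A minimal audification sketch using only Python's standard `wave` and `struct` modules: each data value becomes one 16-bit sample of a mono WAV file. The helper names are hypothetical. `resample` only changes pitch and duration together; stretching time without changing pitch would need a further technique (for example a phase vocoder), which is not shown here.

```python
import struct
import wave


def normalize(series: list[float]) -> list[float]:
    """Remove the mean (DC offset) and scale the series into [-1, 1]."""
    mean = sum(series) / len(series)
    centered = [x - mean for x in series]
    peak = max(abs(x) for x in centered) or 1.0  # constant series -> silence
    return [x / peak for x in centered]


def resample(samples: list[float], factor: float) -> list[float]:
    """Linear-interpolation resampling. factor > 1 shortens the signal and
    raises its pitch by the same factor; factor < 1 does the opposite."""
    if len(samples) < 2:
        return list(samples)
    n_out = int((len(samples) - 1) / factor) + 1
    out = []
    for i in range(n_out):
        pos = i * factor
        j = int(pos)
        frac = pos - j
        nxt = samples[min(j + 1, len(samples) - 1)]
        out.append(samples[j] * (1.0 - frac) + nxt * frac)
    return out


def to_pcm16(samples: list[float]) -> bytes:
    """Encode samples in [-1, 1] as little-endian 16-bit PCM."""
    return b"".join(struct.pack("<h", int(32767 * s)) for s in samples)


def audify(series: list[float], path: str, sample_rate: int = 44100) -> None:
    """Write the data values themselves as the waveform of a mono WAV file.
    One data value becomes one sample, so data recorded at 100 Hz is
    heard 441 times faster than real time."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16 bit
        w.setframerate(sample_rate)
        w.writeframes(to_pcm16(normalize(series)))
```

The playback rate alone sets the time compression; e.g. `audify(resample(eeg, 2.0), "eeg.wav")` (with `eeg` a hypothetical list of readings) would halve the duration once more and raise the pitch by an octave.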

Earcons

For navigation and orientation in data trees (like directory trees), and to communicate more complex messages, Earcons have been proposed by Blattner et al. Earcons are simple tonal combinations or arbitrary acoustic patterns whose meaning must be learned by the user, and which can be combined to build non-verbal messages of higher complexity. Their disadvantages are the learning effort and the limited range of application fields.
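The idea of combining learned motifs can be sketched as follows, loosely in the spirit of the compound and hierarchical earcons of Blattner et al. The motif vocabulary, the pitch scheme for tree nodes and all function names are made up for illustration; a note is a (MIDI pitch, duration) pair.

```python
from typing import Optional

# A note is (MIDI pitch, duration in seconds); pitch None means a rest.
Note = tuple[Optional[int], float]

# Hypothetical motif vocabulary: the user has to learn what each motif means.
MOTIFS: dict[str, list[Note]] = {
    "file":   [(72, 0.1), (76, 0.1)],
    "folder": [(60, 0.2), (64, 0.1), (67, 0.1)],
    "open":   [(67, 0.1), (72, 0.2)],
    "delete": [(64, 0.2), (60, 0.2)],
}
REST: Note = (None, 0.15)


def compound_earcon(*words: str) -> list[Note]:
    """Build a longer message by chaining motifs, separated by short rests."""
    notes: list[Note] = []
    for k, word in enumerate(words):
        if k > 0:
            notes.append(REST)
        notes.extend(MOTIFS[word])
    return notes


def node_earcon(path: list[int]) -> list[Note]:
    """Earcon for a node in a directory tree given its path of child indices.
    Every node starts with the 'folder' motif and appends one note per level
    whose pitch encodes the child index, so siblings share their parent's
    prefix and differ only in the last note."""
    notes = list(MOTIFS["folder"])
    for index in path:
        notes.append((72 + 2 * index, 0.1))
    return notes


def midi_to_hz(pitch: int) -> float:
    """Equal-tempered frequency, with MIDI note 69 = A4 = 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)
```

The sketch makes the stated disadvantage visible: nothing in `MOTIFS` sounds like a file or a folder, so every mapping has to be learned.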

Auditory Icons

This sonification uses sounds in a metaphorical sense, so that the effort to learn the display is decreased. For example, filling a bottle with water produces a commonly known sound evolution, which might be applied to a 'download progress meter' sonification. Much research in the field of sonification focuses on how to find and select suitable sounds for a GUI. However, this display is rather unsuited for presenting general types of data.
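The bottle metaphor can be sketched with a crude physical approximation, assuming the air column above the water behaves like a tube closed at one end, so its resonance is f = c / (4 L). This ignores the neck and end corrections of a real bottle; the bottle height and the function names are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
BOTTLE_HEIGHT = 0.30    # m; hypothetical bottle


def bottle_pitch(progress: float) -> float:
    """Resonance frequency (Hz) of the air column left above the water when
    a download is `progress` (0..1) complete. The air column is treated as a
    tube closed at the water surface and open at the top: f = c / (4 L)."""
    progress = min(max(progress, 0.0), 0.95)  # keep some air; f grows without bound as L -> 0
    air_column = BOTTLE_HEIGHT * (1.0 - progress)
    return SPEED_OF_SOUND / (4.0 * air_column)


def download_meter(progress_updates: list[float]) -> list[float]:
    """Pitch sequence for a series of progress updates, rising as the 'bottle' fills."""
    return [bottle_pitch(p) for p in progress_updates]
```

Because the pitch is inversely proportional to the remaining air column, it doubles when the bottle is half full and rises ever faster towards the end, which is the familiar cue that the bottle is nearly full.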

Parameter Mapping

This sonification is a kind of sonic scatter plot. For each data record, an acoustic event is generated whose properties are driven by the data values (which are mapped to the sound attributes). So the columns of a data set may be mapped to the pitch, duration, volume and onset of the tone, vibrato strength, vibrato speed, brightness, spectral evolution of the sound, roughness, the envelope of the sound, etc. The high number of different attributes allows the design of a high-dimensional acoustic display.
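A minimal parameter-mapping sketch, assuming four columns mapped linearly to onset, pitch, volume and duration; the other attributes named above (vibrato, brightness, roughness, ...) are left out. The output ranges and all names are hypothetical choices.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed)

# Hypothetical output ranges for four of the sound attributes named above.
ATTRIBUTE_RANGES = {
    "onset": (0.0, 2.0),      # seconds from the start of the display
    "pitch": (48.0, 84.0),    # MIDI note numbers (C3 to C6)
    "volume": (0.1, 0.8),     # linear amplitude
    "duration": (0.05, 0.3),  # seconds
}


def lin_map(x, lo, hi, out_lo, out_hi):
    """Map x linearly from [lo, hi] to [out_lo, out_hi]."""
    if hi == lo:
        return (out_lo + out_hi) / 2
    return out_lo + (x - lo) / (hi - lo) * (out_hi - out_lo)


def to_events(data, mapping):
    """mapping: sound attribute -> column index. One event per data record."""
    columns = list(zip(*data))
    ranges = {attr: (min(columns[col]), max(columns[col]))
              for attr, col in mapping.items()}
    return [{attr: lin_map(row[col], *ranges[attr], *ATTRIBUTE_RANGES[attr])
             for attr, col in mapping.items()}
            for row in data]


def render(events):
    """Sum one decaying sine tone per event into a single sample buffer."""
    spans = [(int(e["onset"] * SAMPLE_RATE), int(e["duration"] * SAMPLE_RATE))
             for e in events]
    out = [0.0] * max(start + n for start, n in spans)
    for e, (start, n) in zip(events, spans):
        freq = 440.0 * 2 ** ((e["pitch"] - 69) / 12)
        for i in range(n):
            envelope = 1.0 - i / n  # linear decay
            out[start + i] += (e["volume"] * envelope
                               * math.sin(2 * math.pi * freq * i / SAMPLE_RATE))
    return out
```

The `mapping` dictionary is where the sonification is designed: swapping two column indices produces a completely different sound for the same data.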

Problems with Parameter Mapping

Parameter Mapping is the richest method for the presentation of high-dimensional data, but it has a couple of problems. The sonification is not invariant to spatial transformations: rotating the data, for instance, changes the sound. Many attributes need to be assigned, which makes every sonification sound different. Interpretation is difficult, as the attribute assignment always has to be looked up. Furthermore, the dimensionality is limited to the number of instrument parameters.
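The first problem can be made concrete with a small sketch, assuming the simplest mapping of the first coordinate to pitch: rotating a point cloud by 90 degrees leaves every pairwise distance, and hence the structure of the data, unchanged, yet the mapped pitches change. The function names are hypothetical.

```python
import math


def rotate(points, angle):
    """Rotate 2-D points about the origin by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


def pitch_from_first_column(points, lo=48.0, hi=84.0):
    """Parameter mapping of the first coordinate to MIDI pitch."""
    xs = [p[0] for p in points]
    mn, mx = min(xs), max(xs)
    span = (mx - mn) or 1.0
    return [lo + (x - mn) / span * (hi - lo) for x in xs]


def sorted_distances(points):
    """All pairwise distances, a property that rotation leaves unchanged."""
    return sorted(math.dist(p, q)
                  for i, p in enumerate(points) for q in points[i + 1:])


data = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
turned = rotate(data, math.pi / 2)
print(pitch_from_first_column(data))    # pitches for the original data
print(pitch_from_first_column(turned))  # different pitches, same structure
```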

To overcome some of these problems, a new approach was sought and found: Model-Based Sonification.

Model-Based Sonification

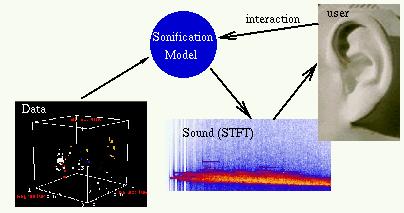

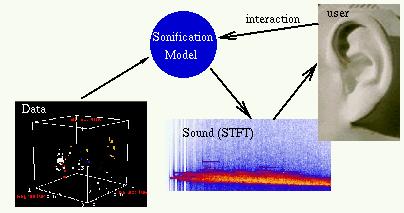

Here, the idea is to take the way we use sound in our natural environment as a model for its use in data sonification. Our auditory senses are optimized for listening to natural sounds and are specialized in drawing information from them. So the idea is to build a sonification interface in which the sound is produced in a way similar to a physical model, and in which the modes of interaction with the data take into account the way we usually inspect objects.

Normally, real-world objects are silent in a state of equilibrium. They produce sound when they are excited (struck, hit). Analogously, the data only produces sound if it is excited by the user. The excitation happens in a visual interface. So to speak, an artificial "dynamic data instrument" is determined by the model and is played by the user.

To define a model-based sonification, the following things have to be determined:

- the system setup: what are the degrees of freedom (d.o.f.)?

- the virtual physics: how are the d.o.f. coupled?

- the acoustic observables: how do the d.o.f. contribute to an acoustic signal?

- the modes of interaction: how might the user excite the system?

- the listener attributes: how do acoustic waves propagate to the user, and where is the user located?
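The five design questions above can be answered in code, as in this toy sketch where each one gets a single, deliberately simple answer (all of them hypothetical): one damped oscillator per data point, no coupling, summed velocities as the observable, a "strike" at a position in data space as the interaction, and distance attenuation towards a listener position. It is an illustration of the structure, not a published model.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ToyModel:
    """Hypothetical model-based sonification, one choice per design question.

    system setup:        one oscillator (displacement x, velocity v) per data point
    virtual physics:     independent damped springs, no coupling between points
    acoustic observable: the sum of all velocities
    interaction:         excite() 'strikes' the data at a position in data space
    listener:            each point's contribution falls off with its distance
                         to the listener position
    """
    points: list[tuple[float, ...]]
    listener: tuple[float, ...]
    stiffness: float = (2 * math.pi * 440.0) ** 2  # per unit mass; rings near 440 Hz
    friction: float = 20.0                          # damping per unit mass (1/s)
    x: list[float] = field(init=False, default_factory=list)
    v: list[float] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        # Equilibrium: all oscillators at rest, so the model is silent.
        self.x = [0.0] * len(self.points)
        self.v = [0.0] * len(self.points)

    def excite(self, where: tuple[float, ...], strength: float = 1.0) -> None:
        """Kick every oscillator; points closer to `where` get a stronger kick."""
        for i, p in enumerate(self.points):
            self.v[i] += strength / (1.0 + math.dist(p, where))

    def step(self, dt: float) -> None:
        """Advance the virtual physics by dt using semi-implicit Euler."""
        for i in range(len(self.points)):
            accel = -self.stiffness * self.x[i] - self.friction * self.v[i]
            self.v[i] += accel * dt
            self.x[i] += self.v[i] * dt

    def observe(self) -> float:
        """Acoustic observable as heard at the listener position."""
        return sum(v / (1.0 + math.dist(p, self.listener))
                   for p, v in zip(self.points, self.v))

    def render(self, seconds: float, sample_rate: int = 8000) -> list[float]:
        """Run the model and record the observable once per audio sample."""
        dt = 1.0 / sample_rate
        out = []
        for _ in range(int(seconds * sample_rate)):
            self.step(dt)
            out.append(self.observe())
        return out
```

Rendering before any call to `excite` yields only zeros, mirroring the point that the data stays silent until the user plays it; after a strike the sound decays at a rate set by `friction`.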

Here are some advantages of this approach:

- Task-oriented model design: the model may be designed to yield large perceptual differences when a task-relevant property is present. This makes it possible to build an acoustic toolbox for data exploration.

- Interpretability: knowing the model simplifies the interpretation of the sonification.

- Number of parameters: there are only a few parameters, which are given in the model definition. They are easier to control, and their meaning is intuitively understood.

- Natural sound: the sound is more natural, as it emerges from a dynamic process similar to real-world sounds. Thus the interface may be more pleasing.

Example: Data Sonograms


Here, analogous to real sonograms, a shock wave is introduced at a fixed position in data space. The shock wave expands spherically in the data space, exciting each data point into vibrational motion around its position once the wave reaches it. The motion of the data points is determined by the local potential and the local friction forces. These properties are given by local attributes of the data, e.g. the local probability density and the local class entropy.
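The shock-wave mechanism can be sketched as follows, under several stated assumptions: local attributes are estimated from the k nearest neighbours, density sets the pitch (standing in for the local potential), class entropy sets the decay time (standing in for the friction, so mixed-class regions ring longer), and each point rings as a decaying sinusoid from the moment the wave reaches it. Every constant, mapping and function name here is hypothetical, and the listener position is ignored.

```python
import math
from collections import Counter

SAMPLE_RATE = 8000  # samples per second (assumed)


def knn(points, i, k):
    """Indices of the k nearest neighbours of point i (brute force)."""
    ranked = sorted((math.dist(points[i], p), j)
                    for j, p in enumerate(points) if j != i)
    return [j for _, j in ranked[:k]]


def local_density(points, i, nbrs):
    """Crude density estimate: inverse mean distance to the neighbours."""
    mean_d = sum(math.dist(points[i], points[j]) for j in nbrs) / len(nbrs)
    return 1.0 / (mean_d + 1e-9)


def local_class_entropy(labels, i, nbrs):
    """Shannon entropy (bits) of the class labels of point i and its neighbours."""
    counts = Counter(labels[j] for j in [i, *nbrs])
    n = sum(counts.values())
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def data_sonogram(points, labels, center, wave_speed=1.0, k=5, duration=3.0):
    """Shock wave from `center`; each point starts ringing when the wave reaches it."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    k = min(k, len(points) - 1)
    out = [0.0] * int(duration * SAMPLE_RATE)
    for i, p in enumerate(points):
        nbrs = knn(points, i, k)
        # Hypothetical mappings: density sets the pitch,
        # class entropy lowers the friction (longer ringing in mixed regions).
        freq = 200.0 + 50.0 * math.log1p(local_density(points, i, nbrs))
        tau = 0.02 + 0.2 * local_class_entropy(labels, i, nbrs)  # decay time (s)
        start = int(math.dist(p, center) / wave_speed * SAMPLE_RATE)
        stop = min(len(out), start + int(5 * tau * SAMPLE_RATE))
        for s in range(start, stop):
            t = (s - start) / SAMPLE_RATE
            out[s] += math.exp(-t / tau) * math.sin(2 * math.pi * freq * t)
    return out
```

Since the arrival time grows with distance from `center`, the sonogram is heard as a sweep outwards through the data; with this mapping, overlapping classes would show up as long-ringing tones.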

By listening to data sonograms, it can be perceived whether distinct classes penetrate each other or are separated.

It may also be perceived how much data contributes to a class, and whether a class has outliers.

An advantage is that listening to sonifications does not interrupt the visual display. Sonification might therefore become an important interface in visually highly demanding tasks (e.g. surgery).

Current Research Projects


The current work consists of developing new sonification strategies for effective, easy-to-learn and easy-to-use acoustic data mining tools. Making our listening capabilities usable should extend visual displays rather than replace them.

Sonification is a strongly interdisciplinary field of research. Research on acoustic perception, cognition, sound metrics, sound representation, semantics of sound, efficient sound synthesis (e.g. with physical models), technical acoustics and psychoacoustics contributes to the design of sonification tools.

Some research projects are presented in greater detail on the project pages:

Literature

- [1] G. Kramer, Auditory Display: Sonification, Audification, and Auditory Interfaces, Proceedings Volume, Addison-Wesley, 1994.

- [2] T. Hermann and H. Ritter, Listen to your Data: Model-Based Sonification for Data Analysis, Proc. of the ISIMADE '99, Baden-Baden, Germany, 1999.

Contact

Thomas Hermann: thermann(at)techfak.uni-bielefeld.de

Last modified: Tue 07-14-1999
