Intelligent Systems Lab Project: AUDEYE - Audible Eyed

Participants

- Thoren Huppke

- Evangelos Mitsis

- Atif Mohd

- Christian Witte

- Antonios Zaroulas

Supervisors

- Dr. Kai Essig

- Dr. Thies Pfeiffer

Motivation

- Chess is a complex game with players differing considerably in their expertise levels.

- To help players improve their skills, this project implements a mobile assistive system that acts as an online tutor.

- The system provides automatic context-specific and attention-related analysis of the chessboard constellation.

- This information is then used to give individualised and adaptive support/feedback in real-time.

- The AUDEYE system shall serve as a test bed for future applications in different research projects.

Application Scenario

In several sports and activities, eye tracking has been used successfully to improve users' skills: the gaze data is analysed, and the user is afterwards given feedback on the errors that were detected. In other applications, such as museum guides or systems for people with disabilities, mobile assistive technology supports users in real time. Our system aims to combine both approaches, using eye tracking to create a real-time assistant for chess playing.

In an exemplary scenario, the player wears an eye-tracking device (ETD) that is connected to our AUDEYE system (see Figure 1). While the player is looking at the chessboard and thinking about the best move, the ETD provides a scene image of the current chessboard with an overlaid fixation point, which shows the chess piece the user is currently fixating. The AUDEYE system then automatically extracts a virtual representation of the current chess constellation in real time using computer vision techniques. This representation is sent to an open-source chess engine, and adequate feedback, e.g. a recommendation for the optimal move, is provided using speech synthesis.

Figure 1: Main components of the AUDEYE system

Project's initial state

- Two teams had already worked on the project for a total of four terms.

- As a result, a lot of code and features had already been implemented, which made it hard to get an overview of the project and its components.

- The project did not run properly.

- The project was bound to Visual Studio and Windows.

- Old library versions were in use, leading to incompatibilities.

- The build and run instructions were badly documented.

Objectives

The project goals are:

- Transferring the project to Linux

- Systematic evaluation of the chess constellations' detection

- Improvement of the detection performance

- Evaluation of the progress using the Test Tool

Description

System description at project's initial state

The user wears the eye-tracking device while looking at the chessboard (Fig. 1). A scene camera in the center of the ETD glasses records images of the player's view, while two additional IR cameras are used to calculate the user's fixation point.

When the AUDEYE system receives the data from the ETD, it first detects the chessboard in the video of the scene camera. To do so, it applies several filters and detects Hough lines, which are used to locate the chessboard precisely in the image and to create a rectified image of the board. Edge detection and a comparison of contours are then used to identify the chess pieces.
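
Once a rectified image of the board is available, relating a fixation point to a board square reduces to simple arithmetic. The following is a minimal sketch and not the project's code: it assumes that the fixation has already been transformed into the rectified image, that this image is square with a side length of `board_px` pixels, and that it shows the board from White's side. The helper `fixation_to_square` is hypothetical.

```python
def fixation_to_square(x, y, board_px=800):
    """Map a fixation point (pixels, rectified board image) to a square name.

    Assumes the rectified image shows the board from White's side: file 'a'
    on the left, rank 8 at the top. Returns None for points off the board.
    """
    if not (0 <= x < board_px and 0 <= y < board_px):
        return None
    square_px = board_px / 8
    file_index = int(x // square_px)  # 0..7, left to right -> files a..h
    row_index = int(y // square_px)   # 0..7, top to bottom -> ranks 8..1
    return "abcdefgh"[file_index] + str(8 - row_index)


print(fixation_to_square(450, 650))  # e2
```

Returning `None` for fixations outside the board lets the caller ignore glances at the opponent or the surroundings.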

The project also contains a chess engine and a speech engine in order to calculate the best move and to provide adequate user feedback.

Description of goals and progress

Our first goal was to improve the detection of the chessboard and the chess pieces using methods such as optical flow and expectations based on previous detections. A further goal was to implement the detection of real 3D chess pieces, since the detection had so far only worked for 2D chips.

To verify our progress, we also created a Test Tool that uses recorded eye-tracking sessions to create statistics on the performance of the detection. It runs the detection part of the AUDEYE system on the video sequences and compares the output to a manual annotation. The statistics created by the Test Tool contain information on the detection rate, critical pieces, etc., and can be used to compare several project states.
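
The comparison against a manual annotation can be sketched as follows. This is an illustration under assumptions, not the Test Tool itself: the board representation (a dictionary from square names to piece codes such as `wK`), the function names and the exact statistics are hypothetical.

```python
from collections import Counter


def compare_frame(detected, annotated):
    """Compare one detected board with its manual annotation.

    Both arguments map square names ('e4') to piece codes ('wK', 'bQ');
    empty squares are simply absent. Returns (correct, total, missed),
    where `missed` counts annotated pieces that were detected wrongly.
    """
    squares = [f + r for f in "abcdefgh" for r in "12345678"]
    correct = 0
    missed = Counter()
    for sq in squares:
        truth = annotated.get(sq)
        if detected.get(sq) == truth:
            correct += 1
        elif truth is not None:
            missed[truth] += 1
    return correct, len(squares), missed


def detection_statistics(frames):
    """Aggregate the per-frame comparison over (detected, annotated) pairs."""
    correct = total = 0
    missed = Counter()
    for detected, annotated in frames:
        c, t, m = compare_frame(detected, annotated)
        correct += c
        total += t
        missed += m
    rate = correct / total if total else 0.0
    return {"detection_rate": rate, "critical_pieces": missed.most_common()}


# One frame in which the white queen is mistaken for a bishop.
annotated = {"e1": "wK", "d1": "wQ", "e8": "bK"}
detected = {"e1": "wK", "d1": "wB", "e8": "bK"}
stats = detection_statistics([(detected, annotated)])
print(stats)
```

Running the same function on the same annotated videos for two versions of the detection gives directly comparable numbers.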

However, we faced huge problems while familiarizing ourselves with the existing code and setup. Porting the original code from Windows to Linux, in order to make the project as platform independent as possible, therefore also became a major task.

Changes done by our team

The current AUDEYE system does not support live input from the ETD because the corresponding software is bound to Windows. Thus we extended an existing video player with the functionality to play eye-tracking videos and to send the data to the AUDEYE system via RSB for further processing.

The video player was also necessary for the Test Tool, which we created first. The output of the Test Tool provides several statistics on how well and how fast the detection works. For this purpose, we selected videos of different chess situations and conditions. The Test Tool automatically applies the current version of the AUDEYE system to the selected videos to detect the chess situation. It then compares the results of the system to manual annotations in order to evaluate the detection functionality of the AUDEYE system and to create statistics on its performance (see Fig. 2).

Figure 2: Test Tool dependencies

When we started our work on the AUDEYE project, poker chips with low-quality paper prints were used as chess pieces. We decided to replace them with 3D printed chips with less noise in order to improve the detection. However, the original algorithms had apparently been optimized for the old pieces, so the detection rate decreased for the new 3D chips.

Figure 3: Old chip

Figure 4: New 3D printed chip

We replaced the chess engine and the speech engine used by previous groups with the chess engine Stockfish and a speech engine based on the Google API. We modified both engines to work with RSB. Both engines are platform independent.
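
Stockfish is driven through the UCI protocol, which accepts positions in FEN notation, so a detected constellation has to be serialised before the engine can evaluate it. The following is a minimal sketch of that step under assumptions: the dictionary-based board representation and both function names are hypothetical, and the side to move is passed in because it cannot be read from a single image.

```python
def board_to_fen(pieces, side_to_move="w"):
    """Serialise a detected position as a FEN string.

    `pieces` maps square names ('e1') to FEN piece letters: uppercase for
    White ('K', 'Q', ...), lowercase for Black. Castling rights, en passant
    square and move counters are not observable from a single image, so
    neutral defaults are used here (an assumption of this sketch).
    """
    ranks = []
    for rank in "87654321":          # FEN lists rank 8 first
        row, empty = "", 0
        for file in "abcdefgh":
            piece = pieces.get(file + rank)
            if piece is None:
                empty += 1           # run-length encode empty squares
            else:
                if empty:
                    row += str(empty)
                    empty = 0
                row += piece
        if empty:
            row += str(empty)
        ranks.append(row)
    return "/".join(ranks) + f" {side_to_move} - - 0 1"


def uci_commands(fen, movetime_ms=1000):
    """UCI commands that ask an engine for its best move in this position."""
    return [f"position fen {fen}", f"go movetime {movetime_ms}"]


fen = board_to_fen({"e1": "K", "d1": "Q", "e8": "k"})
print(fen)  # 4k3/8/8/8/8/8/8/3QK3 w - - 0 1
```

The engine answers the `go` command with a `bestmove` line, which could then be handed to the speech engine.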

Results

The project has been transferred to Linux. It uses CMake for the build process and Jenkins for continuous integration. It should now also be platform independent, but this has not been tested yet. The Test Tool is ready for use. Because of the delays caused by familiarizing ourselves with the code and transferring it, we have not yet been able to improve the detection.

Compared to our original goals, we:

- finished the code transfer to Linux

- developed a Test Tool

- printed new 3D chips for the use as chess pieces

- did not yet achieve the detection of 3D pieces

- did not yet improve the chess piece detection

The video shows a short presentation of the working chess detection.

Discussion and Conclusion

Conclusion

- We transferred the original Windows code to Linux.

- The board detection is stable and works fine.

- The figure detection has some problems in determining the correct shape, especially for the new pieces. Nonetheless, it provides correct information about whether a field is occupied or not.

- The Test Tool was created and can now be used to evaluate the figure detection.

Lessons learned

During the term we discovered that it is important to reserve a lot of time for getting to know an existing project. We underestimated this and expected better documentation, which is why some of our original goals could not be achieved. In addition, the state of the project, with its old libraries and incompatibilities, forced us to move it to Linux and to restructure it. In general, we learned to be prepared for unexpected events.

Outlook

- In the following term we plan to bring the project into a well-structured state and to test its cross-platform functionality.

- After that we will decide whether to conduct a user study or to improve the AUDEYE system by adding techniques for partial chessboard detection. Most probably we will conduct a user study. Its concrete specification and expected outcome will be discussed during the next term.

- The project could also be expanded by applications for augmented reality systems like highlighting dangerous pieces to the player.

- An automated expertise rating system of players can be created and implemented.

Summer term

Our work in the second term focused on improving the detection of the chess situation, on restructuring the project, and on two user studies. A usability study was conducted to evaluate the problems and benefits of the applied feedback methods. The other study used a different setup and aimed at using gaze data to classify players' skills into the categories expert, good and novice.

Objectives

- Improve the detection rate

- Extend the system to be an interactive chess advisor

- Conduct a usability study of the system and compare two feedback variants

- Conduct a study to rate and classify users' chess skills using gaze data analysis

Execution

Preparing the usability study

- To conduct a user study, we had to create acoustic feedback for the user. We realized the feedback in two different ways.

- The first variant uses the Google speech engine.

  - The feedback is triggered when the user looks at one of the two kings on the board.

  - A generated voice then tells the user the best available move.

- The second variant uses sonification.

  - The user triggers the feedback by looking at a piece or at a destination for a piece.

  - The feedback consists of two sounds. The first one is always the same, while the second one is higher for a better move.

  - The user can find the best move by looking at all the pieces and their possible destinations and by figuring out which combination produces the highest tone.

- In the study, the acoustic feedback is tested for its usefulness in coaching applications.

- Additionally, we created a Fallback GUI that enables us to do the figure detection manually.

  - This allows us to conduct the study even if the detection does not work perfectly.

Figure 5: Fallback GUI
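
The sonification variant relies on a monotonic mapping from the quality of a move to the pitch of the second tone. A minimal sketch of such a mapping is given below. The frequency range, the use of engine scores in centipawns and the function name `move_tone_hz` are assumptions made for illustration and are not the values used in the study.

```python
def move_tone_hz(score, worst, best, low_hz=220.0, high_hz=880.0):
    """Map a move's score to the pitch of the second feedback tone.

    The mapping is linear in the exponent, so equal score differences
    correspond to equal musical intervals. `worst` and `best` are the
    lowest and highest scores among the currently legal moves.
    """
    if best == worst:
        return high_hz                      # all moves are equally good
    t = (score - worst) / (best - worst)    # 0.0 (worst) .. 1.0 (best)
    t = min(1.0, max(0.0, t))
    return low_hz * (high_hz / low_hz) ** t


# Hypothetical engine scores (centipawns) for three candidate moves.
scores = {"e2e4": 35, "a2a3": -10, "g1f3": 80}
worst, best = min(scores.values()), max(scores.values())
tones = {m: round(move_tone_hz(s, worst, best), 1) for m, s in scores.items()}
print(tones)
```

With the default range, the best move sounds two octaves above the worst one, which keeps the difference audible even for untrained listeners.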

The usability study

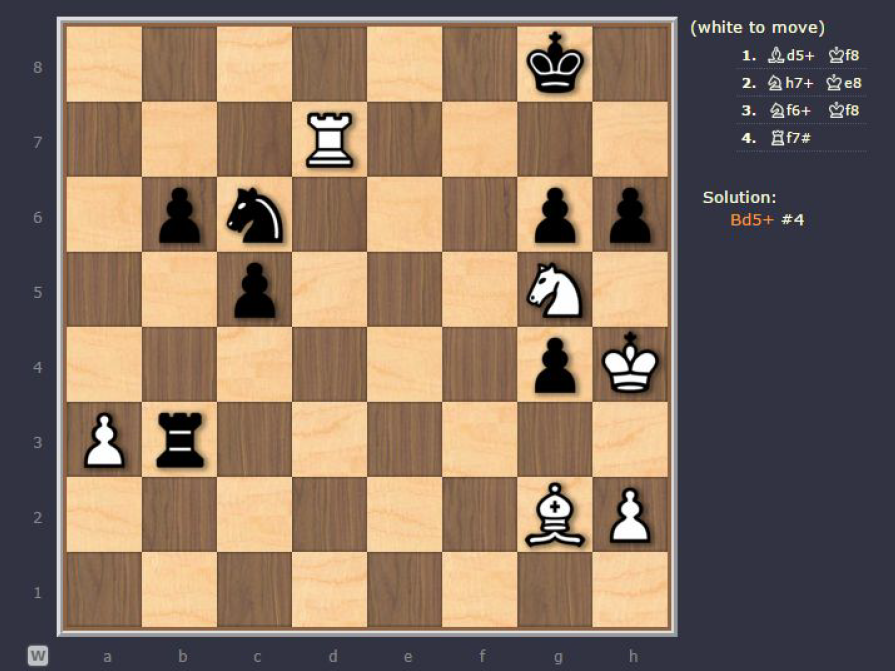

Fifteen volunteers (age: M = 26.14, SD = 2.85 years; 3 female) participated in our study. Each participant was given an individual appointment of about 40 minutes. First, they filled out a questionnaire on demographics and chess expertise (Fig. 6). Afterwards, the eye-tracking device was calibrated for them and they were asked to solve two chess puzzles (Fig. 7, 8) with the assistance of the AUDEYE system.

The participants were assigned to one of two groups according to how well the eye-tracking device could be calibrated for them. The group for which the calibration worked well (sonification group, n = 7, 0 female) received feedback by sonification. The second group (speech group, n = 8, 3 female) received feedback from the speech engine. Both variants are described under Preparing the usability study. After the trial, each participant filled out another questionnaire rating the system and gave feedback on its problems and opportunities (Fig. 9, 10).

Figure 6: Questionnaire before trial

Figure 7: One of the chess puzzles used in the trial

Figure 8: Another puzzle used in the trial

Figure 9: First part of the questionnaire after the trial

Figure 10: Last part of the questionnaire after the trial

The study was conducted in 5 sessions that took about 18 hours in total, including preparation, calibration and some malfunctions. The evaluation of the usability part did not yield any relevant results. However, we received some interesting feedback in the final questions and in the discussion with the participants (see Results).

For the study, the Fallback GUI was used to replace the figure detection manually.

Classifying Player's Expertise Level in Chess by Analysing Gaze Data

Objective

To analyse the gaze data of participants in a "find the best move" task and to use their fixations on different regions of the chessboard to classify their competency in playing chess.

Motivation

Studies have shown that experts can rapidly distinguish between relevant and irrelevant chessboard regions and therefore find the best move in a given chess situation faster than novices.

Setup

Chess problems in which the regions relevant and irrelevant to the game situation are separated from each other were designed with the help of a chess expert and shown to the participants. Their gaze data was recorded with an eye-tracking device. The time they spent fixating on the relevant and irrelevant regions while solving a problem was measured and compared with the performance of an expert solving the same problem.

Figure 11: Sample problem (the relevant regions are highlighted in green and the irrelevant regions in blue)
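
The measurement described in this setup can be expressed as the share of fixation time spent on relevant squares, which is then compared with the expert benchmark. The sketch below illustrates this under assumptions: the fixation format (square name and duration in milliseconds), the function name and all numbers are hypothetical and are not data from the study.

```python
def relevant_fixation_share(fixations, relevant_squares):
    """Fraction of total fixation time spent on task-relevant squares.

    `fixations` is a list of (square, duration_ms) tuples; fixations that
    fall outside the board are expected to be filtered out beforehand.
    """
    total = sum(duration for _, duration in fixations)
    if total == 0:
        return 0.0
    on_relevant = sum(d for sq, d in fixations if sq in relevant_squares)
    return on_relevant / total


# Illustrative values only, not data from the study.
relevant = {"e4", "e5", "f6", "g7"}
participant = [("e4", 300), ("a1", 500), ("f6", 200)]
expert = [("e4", 400), ("f6", 500), ("a1", 100)]

p_share = relevant_fixation_share(participant, relevant)
e_share = relevant_fixation_share(expert, relevant)
print(p_share, e_share)  # 0.5 0.9
```

A participant whose share is close to the expert's value would be rated as more skilled than one who spends most of the time on irrelevant regions.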

Twelve volunteers (11 male, 1 female) participated in our study. Each participant was given an individual appointment of about 45 minutes. Participants filled out a questionnaire with demographic details and rated their own chess skills. Afterwards, the eye-tracking device was calibrated for them and they were asked to solve chess puzzles, with the task of finding the best move for White. Data from 2 participants could not be used for the analysis: in one case the application crashed in the middle of the experiment, and in the other the eye-tracking device could not be calibrated because the participant wore glasses.

We used 12 experimental problems. The relevant and irrelevant regions in each problem were always separated. Each problem was presented on a screen (for better calibration of the eye-tracking glasses), and the participant had up to 3 minutes per problem to choose the best possible move for White as quickly as possible. The participants gave their answers orally to the tester. An expert's responses had been recorded as a benchmark for comparing the participants' skills.

Figure 12: Study setup

Results

General

- Thanks to fine adjustments of the parameters that we made during the study, the detection is now very accurate, except for the queen.

- The system can now be used as a chess advisor and is ready for studies

The video shows a short presentation of the current system version.

Usability study

- The usability study suggests that neither feedback variant is helpful for learning purposes, because neither explains the chess situation. This is especially true for the voice variant, which only tells the user the best move.

- The sonification feedback needs to be more flexible, because participants found it either too slow or too frequent.

Classification Study

- Out of the 10 participants with usable data, only 1 was classified as Good and the rest as Novice.

- People generally overrate their skills: 7 out of 12 participants rated themselves as good players and 1 as an expert player.

- Detailed report of the study can be seen here.

Outlook

- An open task remains the detection of real 3D chess pieces.

- One participant suggested using visual feedback in augmented reality. This could be a future task.

- The current state of the system makes it possible to conduct studies on several aspects, such as different feedback techniques or expertise levels, using the detection of the board combined with gaze tracking.

- The system can be made more adaptive, for example by adding an expertise-based component.