Intelligent Systems Lab Project: General Purpose Tabletop Robots

Participants

- Marvin Barther

- Alexander Bauer

- Nils Neumann

- Florian Patzelt

Supervisors

- Stefan Herbrechtsmeier

- Timo Korthals

- Thomas Schoepping

Motivation

- Working with multiple mini robots.

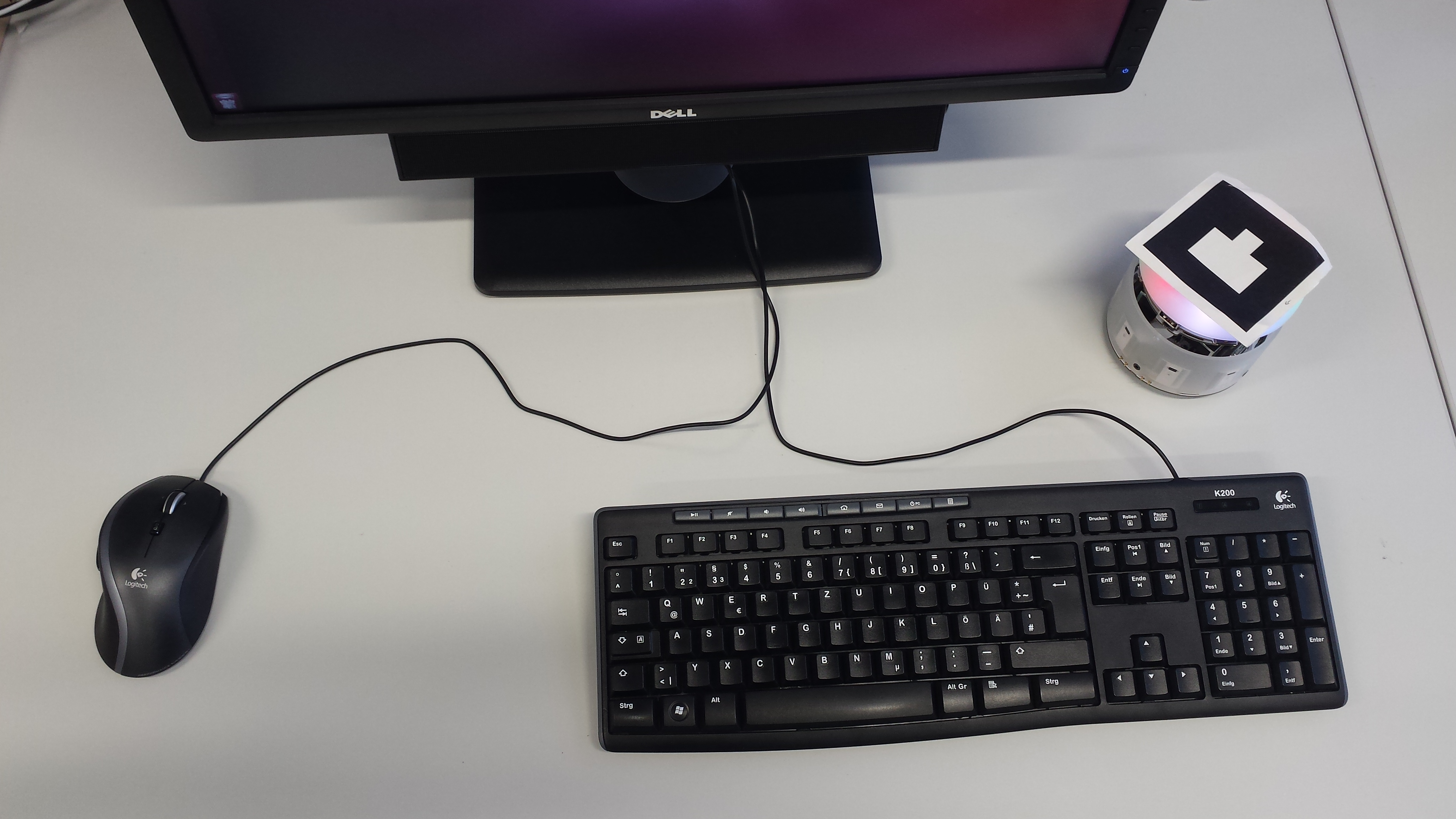

- Integrating mini robots in a modern workspace, with several employees sitting at an office desk.

- The robots help the employees organise their desk.

- Employees spend much time on automatable tasks at the workspace; mini robots can take over these tasks.

Application Scenario

A typical day at the office: Several employees are working at an office table. Employee A is writing a letter. He notices an error, crumples up the sheet of paper and throws it on the table. A mini robot on the table detects the rubbish and pushes it towards the rubbish bin at the other side of the table.

After employee A has finished his letter he needs a stapler, but employee B on the other side of the table has just used the stapler. Employee B calls a mini robot, places the stapler on top of it and orders the robot to transport the stapler to employee A. Thereupon the mini robot carries the stapler to employee A. Employee A takes the stapler and continues his work.

Objectives of the First Term

The project goals were:

- Integration of the AMiRos [1] in a modern office environment.

- Setting up a reliable tracking system to localize the robots on the office desk.

- Establishing a cooperative multi robot setup with RSB [3] communication between the robots.

- Developing a cooperative exploration strategy that generates a map of the working desk.

- Implementing edge avoidance to keep the robots from falling off the table.

- Realizing the transport of small objects with the AMiRos.

- Programming the AMiRos to autonomously clean up the table.

Description

The project is divided into several parts:

- Localization

- Exploration

- Object Transport

- Clean Up

Localization

To localize the AMiRos on the working desk a visual tracking system is used. For this purpose a unique marker is placed on top of each robot. These markers are tracked by a ceiling-mounted camera. The marker positions in the camera image are calculated by ARToolKit [4]. These positions are transformed into a global coordinate system and sent to the robots via RSB.

Exploration

The Exploration can be divided into two subparts: Map Building and Frontier-Based Exploration. Map Building is the process of generating a representation of the environment from sensor measurements, and Frontier-Based Exploration is the navigation method used to explore unknown regions in the environment.

Map Building

The AMiRo uses a 2D occupancy grid map as a representation of the environment. Each grid cell contains a value representing the probability that it is occupied by an obstacle. The values in the grid are updated based on the measurements of the 8 proximity sensors mounted on the robot. For this purpose an inverse sensor model is used. It defines a probability distribution over where the obstacle that caused a sensor measurement might be located relative to the robot. Combining this with the robot's position received from tracking makes it possible to increase the probability in grid cells that are most likely occupied and to decrease it in free cells.

For edges detected by the ground sensors of the robot a separate map is used. Whenever the sensors detect an edge, all cells in a line parallel to the robot are updated.
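
The occupancy update can be illustrated with a minimal Python sketch. It is not the project's implementation: the log-odds representation, the increments `L_OCC` and `L_FREE`, the single-ray sensor model and all function names are assumptions chosen for illustration. Poses and distances are given in grid cells.

```python
import math

# Hypothetical log-odds increments (assumptions, not taken from the project).
L_OCC = 0.85   # added to the cell where the obstacle is assumed to be
L_FREE = -0.4  # added to cells the sensor beam passed through


def to_cell(x, y):
    """Map a continuous position (in cell units) to integer grid indices."""
    return (math.floor(x), math.floor(y))


def probability(log_odds):
    """Convert a log-odds value back into an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


def update_ray(grid, pose, sensor_angle, distance, max_range=5.0):
    """Update `grid` (dict: cell -> log-odds) along one proximity sensor ray.

    `pose` is (x, y, theta) as received from tracking; `sensor_angle` is the
    mounting angle of the sensor relative to the robot's heading.
    """
    x, y, theta = pose
    angle = theta + sensor_angle
    hit = distance < max_range          # beyond max_range nothing was seen
    reach = min(distance, max_range)
    hit_cell = to_cell(x + reach * math.cos(angle), y + reach * math.sin(angle))

    # Cells between the robot and the end of the ray are probably free.
    free_cells = {
        to_cell(x + step * math.cos(angle), y + step * math.sin(angle))
        for step in range(int(reach))
    }
    free_cells.discard(hit_cell)
    for cell in free_cells:
        grid[cell] = grid.get(cell, 0.0) + L_FREE

    # The cell at the measured distance is probably occupied.
    if hit:
        grid[hit_cell] = grid.get(hit_cell, 0.0) + L_OCC
    return grid
```

Repeated measurements of the same cell accumulate evidence, so a single noisy reading does not immediately flip a cell from free to occupied.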

Frontier-Based Exploration

The navigation strategy used during exploration is based on the Frontier-Based Exploration proposed by Brian Yamauchi [2]. The main idea of this navigation strategy is that the most information about the world is received by moving to the boundary (frontier) between open space and uncharted territory. To find and plan a path to this frontier all cells in the occupancy grid map have to be classified as either open, unknown or occupied. This is done by a simple thresholding procedure. In [2] a process analogous to edge detection and region extraction in computer vision is used to find the closest frontier. Then the path to this frontier is planned with a depth first search on the occupancy grid map. In our approach these two steps are combined by using Dijkstra's algorithm on the occupancy grid map. To prevent collisions, obstacles in the map are dilated by the robot's radius.

We further expanded the Frontier-Based Exploration by a simple line-following algorithm to achieve an efficient exploration of the edges of the table.
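
The combination of thresholding, frontier search and path planning can be sketched as follows. This is an illustrative Python sketch, not the project's code: the threshold values, the 4-connected grid with unit step costs and the function names are assumptions, and the obstacle dilation and the line-following extension are left out.

```python
import heapq

OPEN, UNKNOWN, OCCUPIED = 0, 1, 2


def classify(p, free_below=0.3, occupied_above=0.7):
    """Threshold an occupancy probability (threshold values are assumed)."""
    if p < free_below:
        return OPEN
    if p > occupied_above:
        return OCCUPIED
    return UNKNOWN


def nearest_frontier_path(prob_grid, start):
    """Dijkstra over open cells; stops at the first cell next to unknown space.

    Returns the path from `start` to the nearest frontier cell as a list of
    (row, col) tuples, or None if no frontier is reachable.
    """
    rows, cols = len(prob_grid), len(prob_grid[0])
    cls = [[classify(p) for p in row] for row in prob_grid]

    def neighbours(cell):
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                yield (nr, nc)

    dist = {start: 0}
    parent = {start: None}
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if d > dist[cell]:
            continue  # outdated queue entry
        # A frontier cell is an open cell with at least one unknown neighbour.
        if any(cls[r][c] == UNKNOWN for r, c in neighbours(cell)):
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for nxt in neighbours(cell):
            if cls[nxt[0]][nxt[1]] != OPEN:
                continue  # only drive through known free space
            nd = d + 1
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                parent[nxt] = cell
                heapq.heappush(queue, (nd, nxt))
    return None
```

Because Dijkstra's algorithm expands cells in order of increasing path cost, the first frontier cell it reaches is also the closest one, so no separate frontier extraction step is needed.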

Object Transport

In this task the AMiRo transports objects from its current position to a given position (e.g. to another workspace). The object is placed on top of the robot.

Challenge

The transport itself is quite easy, because the objects can be placed stably on top of the AMiRo and it just has to drive to the final position. The challenge in this task is that an object placed on top of the AMiRo covers the marker, so the tracking system cannot locate the transporting AMiRo.

Solution

If the AMiRo receives the command to transport an object, it will call for help. Another AMiRo comes close to the transporting robot and guides it to the final position. For following the guide the transporting robot uses its proximity sensors. The task scenario can be described in four steps:

- Initialization: The AMiRo gets the destination by an additional marker position from the tracking system. Now it is waiting to receive the object.

- Preparation: The transporting AMiRo calculates the path to the final position and chooses a very close position in front of itself. The guide drives to this position and the transporting AMiRo turns towards the guide.

- Following: The guide drives to the final position. It is always in front of the transporting AMiRo, so the transporter can use its two frontal proximity sensors to calculate its distance and direction to the guide. To differentiate between the guide and other objects the transporting robot uses its proximity sensors at the side.

- Finalization: When the guide reaches the final position, it will inform the transporter that the task has been finished. While the guide can now continue with other tasks, the transporting AMiRo is waiting for the user to take the object.

Clean Up

In this task the robot is intended to clean up the table by finding free-standing objects and pushing them to a kind of dumping ground, i.e. an area marked via an additional tracking marker. The task scenario can be described in three steps:

- Finding and selecting an object to push: All free-standing objects need to be distinguished from table borders (i.e. edges or fixed walls) in the occupancy grid map. This is done by a common image processing method which finds disconnected blobs in the space within the borders surrounding the table. Of all objects found, the one nearest to the robot is chosen to be pushed to the dumping ground.

- Computing the object's path: The object will be pushed along an optimal path, which is computed by the Dijkstra algorithm on the occupancy grid map.

- Pushing the object: The calculated path is divided into straight segments. After the robot has finished one segment it relocates in order to execute the next path segment. The robot stops when the dumping ground is reached.
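
The first step, finding free-standing objects, can be sketched with a simple connected-component search. The sketch below is written under assumptions: it treats every occupied component that touches the outer rim of the map as part of the table border, uses 4-connectivity, and the function names are hypothetical. The project's actual image processing method is not specified beyond "finding disconnected blobs".

```python
from collections import deque


def free_standing_blobs(occupied):
    """Return occupied components that do not touch the rim of the map.

    `occupied` is a 2D list of truthy/falsy values (thresholded grid map).
    Each blob is a list of (row, col) cells.
    """
    rows, cols = len(occupied), len(occupied[0])
    seen = set()
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not occupied[r][c] or (r, c) in seen:
                continue
            # Flood fill one connected component.
            blob, touches_rim = [], False
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:
                cr, cc = queue.popleft()
                blob.append((cr, cc))
                if cr in (0, rows - 1) or cc in (0, cols - 1):
                    touches_rim = True
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and occupied[nr][nc] and (nr, nc) not in seen):
                        seen.add((nr, nc))
                        queue.append((nr, nc))
            if not touches_rim:
                blobs.append(blob)
    return blobs


def nearest_blob(blobs, robot):
    """Pick the blob whose closest cell is nearest to the robot cell."""
    def squared_distance(blob):
        return min((r - robot[0]) ** 2 + (c - robot[1]) ** 2 for r, c in blob)
    return min(blobs, key=squared_distance) if blobs else None
```

Objects that stand directly against a wall merge with the border component in this sketch and are therefore not selected for pushing.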

Results of the First Term

Exploration

As can be seen in the videos, the implemented navigation strategy enables an effective exploration and yields a good representation of the environment. Although the size of the obstacles in the map may differ from their real size, the map is sufficient for the tasks the robots have to perform in this project.

This video shows the exploration of a table by two AMiRos. On the right side the image of the camera used for tracking is shown. On the left side the maps generated by the robots are visualized. The probabilities are represented by brightness: white areas represent free space and dark areas represent obstacles. The obstacles in the right map are expanded by the robot's radius. The terminal shows the robots' poses as received from tracking.

Object Transport

Basically this task has been solved: The AMiRos talk to each other and can execute the initialization, the preparation and the finalization of the task. While following the guide, however, the behavior of the transporter is unstable. The problem is that objects ahead of the robot are not detected by the proximity sensors at the AMiRo's side, and therefore it is hard to differentiate them from the guide. Without any objects close to the transporter the following algorithm works fine.

The video shows a simple scenario of the transport task. The final position is on the right side while the transporter (first in yellow) and the guide (first in white) are on the left side. When an object is placed on top of the transporter, it calls for help (blinking red). The guide (now blinking yellow) drives close to the transporter and guides it to the final position. At the beginning of the following part, the robots drive close to a wall on their left side. In this case it can be seen that the transporter corrects its driving direction after differentiating between wall and guide.

Clean Up

In the Clean Up Task the robot performs as intended.

The object pushing path is segmented into straight segments. Before heading to the start point for the next pushing segment the robot drives a little bit backwards (indicated by blue LEDs) to avoid collision with the object. After putting itself in place behind the object the robot pushes the object along the next straight segment (red LEDs). The dumping ground is defined by a marker on the table.

Discussion and Conclusion

The main goals for the first term have been met. We successfully integrated the AMiRos in a modern office environment with a reliable tracking system. They communicate via RSB and are capable of performing tasks cooperatively. An effective exploration strategy was implemented that generates a sufficient map of the desk. Based on this map the AMiRos can transport objects and clean up the table.

Although we achieved all these objectives, the current state of the project has some limitations. For instance, the Map Building is limited by the VCNL4020 sensors used. Because these sensors measure light intensity, we can only build maps of environments consisting of white obstacles. Furthermore, the robots do not select tasks autonomously. All tasks have to be started manually.

Objectives of the Second Term

The project goals were:

- Improvement of the reliability of all tasks of the first term.

- Implementation of object detection for differentiated object delivery.

- Improving the autonomy by creating a main routine which detects and selects tasks on its own.

- Presenting the table top application at the RoboCup German Open 2015 in the @Home competition.

- Extension of the basic scenario by a mobile sensor platform scenario at the RoboCup German Open 2015 in the @Home competition.

Object Detection

The object detection is based on the SURF algorithm [5] as provided by the OpenCV library [6]. The knowledge database of the objects consists of pictures of eight different sides of each object. Using the features calculated by the SURF algorithm, the picture of the current view can be compared with the knowledge database. The object detection can both detect whether a known object is present and identify it.

Main Routine

In the first term the tasks of the table top scenario (presented in the results of the first term) were realized as separate programs. Only one task managing program could be run at a time, because each program started its behavior immediately. Additionally, to switch the task these programs had to be stopped and started manually.

In the second term, with the focus on the AMiRo's autonomy, a main routine was designed which starts and stops the possible tasks. All existing task managing programs had to be redesigned, because they must no longer start their behavior immediately. After starting, they only initialize themselves and wait for commands from the main routine via RSB [3]. As a result, the programs of all tasks can be started together. The main routine then organizes the tasks and sends start and stop commands to the specific task programs.

The main routine is designed as a finite-state machine (FSM) which can communicate with the other programs and can receive orders via RSB. After starting it follows a basic procedure:

- Exploration of the area and detection of possible objects in the map (by the Frontier Exploration of the first term)

- Driving to every object and identifying it (by the object detection which has been described before)

Cooking Scenario on the RoboCup@Home German Open 2015

In cooperation with the Team of Bielefeld (ToBI) the table top scenario was to be integrated in a cooking scenario presented at the RoboCup. The planned scenario can be described as follows:

While the service robot ToBI supports a cooking team member by checking the cooking recipe, the AMiRo, which is placed on a different table, performs its table top scenario by exploring the area and detecting and identifying the objects. As soon as ToBI has recognized that an ingredient is missing in the kitchen, it asks the AMiRo if it has already found it. If so, ToBI drives to the AMiRo's table and the AMiRo pushes the requested object to a specified position where ToBI can grasp it easily. The communication between the AMiRos and ToBI is based on a wifi connection with RSB [3].

The localization of the first term, which is for example necessary for the exploration, is based on the tracking system, which calculates the AMiRo's position by using a camera above the table. For the cooking scenario at the RoboCup the cameras could not be used. Because the navigation could not be extended to another navigation method, the table top behavior of the AMiRo had to be simplified. Functionalities like the exploration could not be used.

Rescue Scenario on the RoboCup@Home German Open 2015

In the final of the competition the basic application scenario has been extended. The AMiRo's task is to support ToBI in a rescue scenario as a waypoint. The scenario can be described as follows:

There is a power blackout in the apartment and the lights are turned off. It is ToBI's task to search for people in the apartment and to guide them out. While ToBI drives through the apartment, two AMiRos, each extended by a laser scanner on top, follow it. At strategic positions each AMiRo gets a command to stay, so that they build a chain of luminous waypoints on the floor of the apartment. After finding people, ToBI sends them out of the apartment by ordering them to follow the waypoint chain. Using the laser scanner the AMiRos recognize the fleeing people and inform ToBI, which counts the rescued people. Finally ToBI returns and collects all AMiRos.

In this scenario the AMiRo is not autonomous anymore, because it only listens to ToBI and executes its commands. The AMiRo has two basic tasks: serving as a waypoint and following ToBI with a laser scanner. The task selection is controlled by a main routine, which starts and stops the tasks as commanded by ToBI. The communication between the AMiRos and ToBI is based on a wifi connection with RSB [3].

Waypoint

When commanded to set up a waypoint the AMiRo takes a reference laser scan. By comparing the following measurements to this initial scan, it can detect whether something has entered or left the scanned area. The information about those two events is published via RSB, so that it can be received by other RSB supporting machines (for example ToBI).
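
The comparison against the reference scan can be sketched as follows. This is a minimal Python illustration under assumptions: the class name, the distance tolerance and the minimum number of changed beams are hypothetical, and the RSB publishing is reduced to a returned event string.

```python
class WaypointMonitor:
    """Detects 'entered' and 'left' events relative to a reference scan."""

    def __init__(self, reference, tolerance=0.1, min_beams=3):
        self.reference = list(reference)  # distances of the initial scan
        self.tolerance = tolerance        # assumed noise margin in metres
        self.min_beams = min_beams        # assumed beams needed for a detection
        self.occupied = False

    def update(self, scan):
        """Compare a new scan to the reference; return an event or None."""
        # Count beams that are clearly shorter than in the reference scan.
        closer = sum(
            1 for ref, cur in zip(self.reference, scan)
            if ref - cur > self.tolerance
        )
        now_occupied = closer >= self.min_beams
        event = None
        if now_occupied and not self.occupied:
            event = "entered"
        elif not now_occupied and self.occupied:
            event = "left"
        self.occupied = now_occupied
        return event
```

Requiring several changed beams rather than one makes the detection less sensitive to single noisy measurements; in the real system the returned event would be published via RSB.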

Following ToBI

In contrast to the following behavior of the object transport task, which relies on the proximity sensors, the laser scanner directly measures the distance to the nearest object in each angular direction. The following behavior takes the direction of the smallest measured distance as the guide's position and derives the distance and angular orientation from it. The angular change is restricted by a low-pass filter, so that the robot stays focused on the guide's position even if the guide drives very close to other objects.
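
One step of this following behavior might look like the sketch below. It is an assumption-laden illustration: the first-order (exponential) low-pass filter, the smoothing factor `alpha` and the function name are not taken from the project.

```python
import math


def follow_step(ranges, angles, prev_heading, alpha=0.2):
    """Return (distance, heading) towards the nearest laser return.

    `ranges` and `angles` describe one laser scan (metres, radians).
    `prev_heading` is the filtered heading from the previous step.
    `alpha` is an assumed smoothing factor between 0 and 1.
    """
    # The nearest return is taken to be the guide.
    nearest = min(range(len(ranges)), key=ranges.__getitem__)
    target = angles[nearest]

    # Wrap the angular difference to [-pi, pi] before filtering.
    diff = math.atan2(math.sin(target - prev_heading),
                      math.cos(target - prev_heading))

    # Low-pass filter: move only a fraction of the way towards the target.
    heading = prev_heading + alpha * diff
    return ranges[nearest], heading
```

With a small `alpha`, a sudden jump of the nearest return to a nearby wall changes the heading only gradually, which gives the guide time to become the nearest object again.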

Main Routine of the Mobile Sensor Platform

The main routine for this task is a finite-state machine (FSM). It is listening to ToBI and is waiting for orders to start or stop the waypoint or the following behavior.
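
Such a finite-state machine can be sketched in a few lines of Python. The state names, the command strings and the class name are hypothetical; the sketch only shows the idea of switching between the two behaviors on external orders, with the RSB communication left out.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    WAYPOINT = "waypoint"
    FOLLOWING = "following"


# Allowed transitions: (current state, received command) -> next state.
TRANSITIONS = {
    (State.IDLE, "start_waypoint"): State.WAYPOINT,
    (State.IDLE, "start_following"): State.FOLLOWING,
    (State.WAYPOINT, "stop"): State.IDLE,
    (State.FOLLOWING, "stop"): State.IDLE,
}


class MainRoutine:
    """Waits for orders and switches between the two behaviors."""

    def __init__(self):
        self.state = State.IDLE

    def handle(self, command):
        """Apply a command; unknown or invalid commands leave the state as is."""
        self.state = TRANSITIONS.get((self.state, command), self.state)
        return self.state
```

Keeping the transitions in a table makes it explicit that a running behavior has to be stopped before the other one can be started.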

RoboZoo on the RoboCup@Home German Open 2015

For a presentation of all the robots participating in the RoboCup@Home competition at once, the competition task "RoboZoo" has been created. Every participating robot gets a presentation area of 2x2 meters. The areas are placed in a line like in a zoo with animal cages at the sides. The visitors of the competition can watch the robots and interact with them. The robots should be as autonomous as possible. Finally the visitors vote for their favorite robot.

In cooperation with ToBI a dancing act with the AMiRos was designed. ToBI stood behind a table on which four AMiRos were placed. To choose a song for ToBI and the AMiRos to dance to, the visitors could use signs with a song name on one side and a QR code on the other. The QR code was read by ToBI, which informed the AMiRos about the chosen song via wifi with RSB [3]. The robots then danced a defined choreography for the chosen song.

Results of the Second Term

Final Table Top Scenario

The basic scenario could be designed as a single table top scenario. The video is separated into the exploration and the object detection with object delivery.

In the video four different views are displayed: The main view shows the scenario from the side, basically the employee's view. On the bottom left side the same scenario is shown from the detecting camera. The other two parts show the generated map with colored markers indicating the robot positions and colored lines representing the planned paths of the AMiRos. The map in the center is the original map with the probabilities for free space (white), obstacles (black) and unknown space (gray). The right map shows the generated obstacle map, where the obstacles are expanded by the AMiRo's radius.

In the first part of the video two AMiRos are exploring the table by using their proximity sensors (blue lights) at the side. Additionally they can detect table edges (red lights), which are marked with black color, by using proximity sensors at the bottom. They share their measurements with each other, so that they can generate a map together. In addition, a repel effect between the AMiRos is implemented to prevent collisions and to improve the exploration procedure.

In the second part the AMiRo conducts an object localization on the map. Then it drives to each object and identifies it (yellow colors). The taken picture for the object identification is shown on the opposite side of the object. Afterwards the AMiRo gets the command via RSB [3] to deliver the cup of coffee to a position (blue lights), which is specified by a tracking marker. A pushing path is calculated, which can be seen in the maps.

Rescue Scenario on the RoboCup

In the following video the rescue scenario of the RoboCup German Open 2015 of ToBI is shown.

You can see the scenario as described before (here the lights are turned on for better visibility), simplified to the basic actions of the AMiRos. ToBI first searches for the people in the apartment and the AMiRos follow it (yellow lights). At two strategic points ToBI asks the AMiRos to stay (blue lights). When ToBI finds the people, it initializes the AMiRos as waypoints (pink lights) and asks the people to follow the waypoint chain to the exit. If a human enters the range of an AMiRo (green lights), ToBI is informed, so that ToBI can count the rescued people. Afterwards ToBI uninitializes the AMiRos (blue lights) and collects them again (yellow lights).

Robo Zoo on the RoboCup

In the two following videos two of the robots' choreographies are shown. The robots are dancing to the songs "Let's Twist Again" by Chubby Checker and "In the Summertime" by Mungo Jerry.

The AMiRos are placed on a table in front of ToBI. Signs with the song name on one side and the QR code on the other are stored at the sides of the table. The visitors can take a sign and show it to ToBI. After informing the AMiRos, the robots start dancing their choreography.

Discussion and Conclusion

The main goals of the second term, including object identification and designing a behavior coordinating main routine, have been met. Additionally the tasks of the first term are more reliable. Finally the tasks could be merged to the final table top scenario. By giving RSB commands the AMiRo can deliver explored and known objects and transport other objects, which are placed on top of it.

However, the commands have to be given directly via RSB. There is no device yet which functions as a useful interface between humans and the AMiRos for commands and possibly for status requests. Furthermore, the navigation could not be extended to other navigation methods, so the position is still given by the tracking system with the camera. This was the main reason why the table top scenario could not be shown at the RoboCup@Home competition as planned.

Additionally a stable mobile sensor platform application has been designed for the AMiRo, which was shown in the rescue scenario in the RoboCup@Home competition. In this case the AMiRo is not autonomous. It just waits for commands from outside and acts as an additional sensor platform for other machines. At the RoboCup ToBI could use the AMiRos as waypoints to check human activity in the AMiRo's laser scanner range. Additionally the AMiRos could follow ToBI to be placed at strategic positions for the rescue scenario.

References

[1] S. Herbrechtsmeier, U. Rückert and J. Sitte: AMiRo - Autonomous Mini Robot for Research and Education, Advances in Autonomous Mini Robots. Springer Berlin Heidelberg, 2012, pp. 101-112.

[2] Brian Yamauchi: Frontier-based exploration using multiple robots, Proceedings of the second international conference on Autonomous agents, pp. 47-53, May 10-13, 1998, Minneapolis, Minnesota, USA

[3] Robotics Service Bus (RSB)

[4] ARToolKit

[5] H. Bay, T. Tuytelaars and L. Van Gool: SURF: Speeded Up Robust Features, ETH Zürich, Universität Leuven

[6] OpenCV library of the feature detection.