Intelligent Systems Lab Project: PUPIL

Participants

- Adrian Gehrke

- Alexander Meerhoff

- Günes Mineraci

- Niksa Rasic

- Kevin Gardeja

Supervisors

- Andrea Finke

- Eckard Riedenklau

Motivation

- Many people do not know how many cooking recipes can be completed with just a few ingredients.

- Thus unimaginative people may think that they do not have enough ingredients for even a single meal.

- Our application therefore shows, in an innovative way, all recipes from an online database that can be completed with the available ingredients, and guides the user through the preparation process.

- Gaze-based interaction keeps the user's hands free and lets them cook while intuitively operating the application.

- The intelligent apartment of the CITEC offers a great platform to implement this concept.

Application Scenario

In our application the user wears eye-tracking glasses, which supply information about the ingredients being looked at. The user initially identifies ingredients using the eye-tracking glasses.

Those ingredients will be added to a list of accessible ingredients. This list is always shown on the application's web interface.

The application also continuously checks whether any cocktail recipes can be completed, that is, recipes that need only ingredients from the accessible ingredients list.
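The completability check described here can be sketched as a simple subset test. This is a minimal sketch, not the project's actual implementation: the function name `completable_recipes`, the dictionary layout, and the example recipes are all assumptions made for illustration.

```python
def completable_recipes(recipes, available):
    """Return the sorted names of recipes whose ingredients are all available.

    `recipes` maps a recipe name to a list of required ingredient names.
    `available` is the list of accessible ingredients.
    Names are compared case-insensitively (an assumption of this sketch).
    """
    have = {item.lower() for item in available}
    return sorted(
        name
        for name, needed in recipes.items()
        if {item.lower() for item in needed} <= have  # subset test
    )


# Hypothetical example data, not taken from the project's database.
RECIPES = {
    "Cuba Libre": ["rum", "cola", "lime"],
    "Gin Tonic": ["gin", "tonic water"],
    "Screwdriver": ["vodka", "orange juice"],
}

accessible = ["Rum", "cola", "lime", "gin"]
doable = completable_recipes(RECIPES, accessible)
print(len(doable), doable)
```

Re-running the function whenever the accessible list changes yields both the number of realizable recipes and their names, which is the information the web interface displays.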

If that is the case, the number of realizable recipes is shown on the web interface and the user can navigate through them using the eye-tracker.

The identification of ingredients, as well as all other interactions that can be achieved with the eye-tracker in our application, is based on the user's visual focus.

Currently all detectable ingredients and actions are mapped to specific markers that the user has to fixate on to interact with the system. This is done via gaze-contingent object detection using BCH markers.

Objectives

The goals for this project are:

- Development of a step-by-step cocktail-mixing assistant in a "wizard"-like way.

- Usage of eye-tracking to detect and collect accessible ingredients and also enable user interaction.

- Connection of the application to online databases to have access to as many recipes as possible.

- Integration into the intelligent kitchen environment.

Description

After starting the project's Python-CGI-Server, the corresponding website will show a list of predefined recipes and an empty list of currently accessible ingredients. The user can then add new ingredients or activate and navigate through the recipe guide using the eye-tracking device.

The PUPIL software maps the user's gaze, received from the eye-facing camera, onto the image seen by the outward-facing camera.

Our Python module, which is a plugin for the PUPIL software, will then compress the image using OpenCV.

The compressed image is then combined with the gaze coordinate values and made available to the other modules via the RSB middleware.
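One way to picture this combination step is a small binary message with a fixed header followed by the compressed image bytes. The sketch below is only an illustration under assumptions: the header layout, the names `pack_frame` and `unpack_frame`, and the use of Python's `struct` module are hypothetical, and the actual project sends its data through RSB rather than through this format. The compressed image is treated as opaque bytes.

```python
import struct

# Hypothetical header: gaze x, gaze y (doubles) and payload length (unsigned int),
# in network byte order without padding.
HEADER = struct.Struct("!ddI")


def pack_frame(gaze_x, gaze_y, compressed_image):
    """Prefix the compressed image bytes with the gaze coordinates."""
    return HEADER.pack(gaze_x, gaze_y, len(compressed_image)) + compressed_image


def unpack_frame(message):
    """Split a message back into gaze coordinates and image bytes."""
    gaze_x, gaze_y, length = HEADER.unpack_from(message)
    image = message[HEADER.size:HEADER.size + length]
    if len(image) != length:
        raise ValueError("message is shorter than its header claims")
    return gaze_x, gaze_y, image


# Placeholder bytes standing in for an OpenCV-compressed frame.
message = pack_frame(0.5, 0.25, b"compressed-image-bytes")
print(unpack_frame(message))
```

Keeping the gaze coordinates and the image in one message means that the receiver never has to match a gaze sample to a frame after the fact.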

The ICL module will receive these images and decompress them to perform (BCH) fiducial marker detection.

For each received frame (image), the program uses the gaze coordinates and each marker's bounding box (edge) to determine whether the user is fixating on a particular marker.

Should the ICL program detect a fixation, the ID or name of the marker is made available through RSB.
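The per-frame fixation test can be sketched as a point-in-rectangle check combined with a dwell counter. This is a minimal sketch under assumptions, written in Python for readability rather than against the ICL API: the `Marker` and `FixationDetector` names, the axis-aligned bounding box, and the `min_frames` dwell threshold are all hypothetical and not confirmed by the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Marker:
    marker_id: int
    x: float       # left edge of the bounding box, in image pixels
    y: float       # top edge of the bounding box, in image pixels
    width: float
    height: float

    def contains(self, gx, gy):
        """True if the gaze point lies inside the bounding box."""
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


class FixationDetector:
    """Reports a marker once the gaze has rested on it for `min_frames` frames."""

    def __init__(self, min_frames=5):
        self.min_frames = min_frames  # assumed dwell threshold
        self._current = None          # marker currently under the gaze
        self._count = 0               # consecutive frames on that marker

    def update(self, gaze, markers):
        gx, gy = gaze
        hit = next((m.marker_id for m in markers if m.contains(gx, gy)), None)
        if hit is None or hit != self._current:
            # Gaze left the marker or moved to another one: restart counting.
            self._current = hit
            self._count = 1 if hit is not None else 0
        else:
            self._count += 1
        # Report exactly once, on the frame where the threshold is reached.
        if hit is not None and self._count == self.min_frames:
            return hit
        return None


detector = FixationDetector(min_frames=3)
markers = [Marker(marker_id=7, x=100, y=100, width=50, height=50)]
results = [detector.update((120, 120), markers) for _ in range(4)]
print(results)
```

Reporting only on the frame where the threshold is reached avoids publishing the same marker repeatedly while the user keeps looking at it.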

The Python client module will then receive the marker ID and search for an ingredient or interaction input corresponding to the fixated marker.

Afterwards the client propagates the necessary changes to the CGI-Server.

For this, the client uses the Splinter library, which performs the changes on the displayed web interface.

Markers can be mapped to any interaction with the web-interface, or to any ingredient desired to be added to the accessible ingredient list.
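The mapping from marker IDs to ingredients or interactions can be sketched as two lookup tables. The IDs, ingredient names, action names, and the `resolve_marker` function below are hypothetical examples, not the project's actual configuration, and the sketch leaves out the Splinter calls that would apply the result to the web interface.

```python
# Hypothetical marker assignments, chosen only for illustration.
INGREDIENT_MARKERS = {1: "rum", 2: "cola", 3: "lime"}
ACTION_MARKERS = {100: "next_step", 101: "previous_step"}


def resolve_marker(marker_id):
    """Translate a fixated marker ID into a (kind, value) pair, or None if unknown."""
    if marker_id in INGREDIENT_MARKERS:
        return ("add_ingredient", INGREDIENT_MARKERS[marker_id])
    if marker_id in ACTION_MARKERS:
        return ("action", ACTION_MARKERS[marker_id])
    return None


accessible = []
for marker_id in (1, 100, 42):
    resolved = resolve_marker(marker_id)
    if resolved is None:
        continue  # unknown marker: ignore it
    kind, value = resolved
    if kind == "add_ingredient" and value not in accessible:
        accessible.append(value)
    print(marker_id, kind, value)
```

With tables like these, assigning a marker to a new ingredient or interaction only requires a new entry, not a change to the client logic.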

The CGI-Server also monitors which recipes can be completed. When new ingredients are added, the server updates the web interface with new information about how many and which recipes are completable.

Results

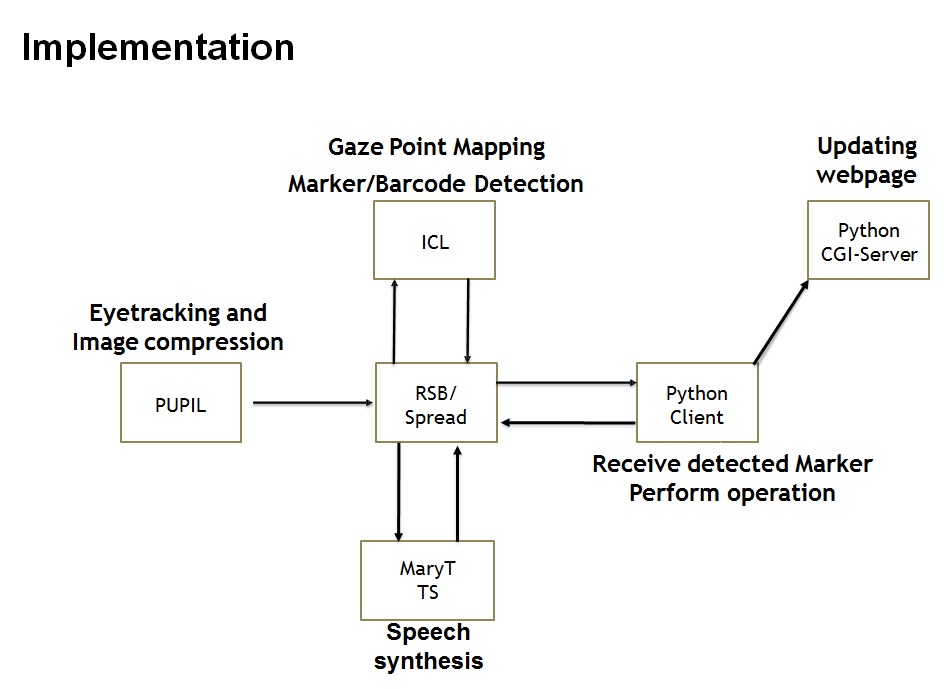

The functionality described above was successfully implemented, resulting in an architecture of five simultaneously running program parts that interact through RSB. Communication via RSB runs reliably and is generally fast enough, although a proper evaluation with users has yet to be carried out.

Hence we achieved the core of our goals. In our scenario it is possible to add ingredients to the list and to trigger several different interactions by gaze.

The video shows a user using the eye-tracker to first add ingredients to the application and then navigate through the preparation steps.

Discussion and Conclusion

We had only some minor issues with eye-tracking calibration. If a user's iris was too dark, the eye-tracker often could not recognize the user's gaze. If a user moved their head, we also often had to recalibrate. Everything else runs smoothly without major crashes.

The arrangements we made at the beginning, while planning the project, worked out quite well. Hence we had no time issues or further problems in achieving our project's goals.

Furthermore, the division into small expert groups also worked out quite well. Every group did a great job, so merging the individual project parts was no big challenge.

Results of the second term (summer term 2015).

In the second term we extended our application with some new components and also improved some features of already implemented components. Because of the architecture we chose for our application, it was quite simple to add new components. The communication between the components has not changed at all. Every component is still encapsulated and modular.

One of the components we added was a speech synthesis server. We use this server to give audio feedback to the user, so that the user is informed about what they are currently doing. For example, the speech synthesis server tells the user which ingredient they just added or which action they are about to trigger.

Another module we added is an online database for recipes. Thanks to this module, a user now has access to a great variety of cocktails. Whenever an ingredient is added, our application checks, with the help of the database module, which known cocktails the user could mix with the ingredients already added.

We also added a module for scanning bar codes. Thus we can now add ingredients by their bar code as well.

The major feature we improved is the calibration of the eye-tracking glasses. We can now calibrate them using the table plane. Thus we no longer have to recalibrate the eye-tracking glasses every time the user moves their head slightly.