Faculty of Technology | Sociable Agents Group | Artificial Intelligence Group

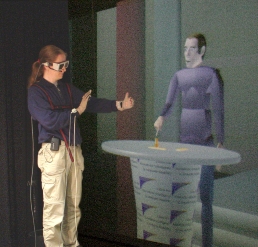

The Articulated Communicator Engine (ACE) is a toolkit for building animated embodied agents that are able to generate human-like multimodal utterances. It provides an easy means of building an agent by defining a kinematic body model, and of specifying the output that the agent is to generate by describing the desired overt form of an utterance in an XML description language (MURML, a multimodal utterance representation markup language). The toolkit takes care of the rest: it creates natural coverbal gestures, synthetic speech, and facial animations on the fly and coordinates them in a natural fashion. One demonstration application is Max, the Multimodal Assembly eXpert. Max is situated in a virtual environment for cooperative construction tasks, where he multimodally demonstrates to the user the construction of complex aggregates and guides the user through interactive assembly procedures.

Max in action:

Max at the open house in our lab!

Real-time gesture synthesis: Max is able to create and execute gesture animations from MURML descriptions of their essential spatiotemporal features, i.e. of their meaningful "stroke" phases (see example below). To this end, an underlying anthropomorphic kinematic skeleton for the agent was defined, comprising 103 DOF in 57 joints, all subject to realistic joint limits. This articulated body is driven in real time by a hierarchical gesture generation model that emphasizes the accurate and reliable reproduction of the prescribed features. It includes two main stages:

MURML specification of a two-handed iconic gesture:

```xml
<gesture id="gesture_0">
  <constraints>
    <symmetrical dominant="right_arm" symmetry="SymMS">
      <parallel>
        <static slot="PalmOrientation" value="DirL"/>
        <static slot="ExtFingerOrientation" value="DirA"/>
        <static slot="HandShape" value="BSflat"/>
        <static slot="HandLocation"
                value="LocUpperChest LocCenterRight LocNorm"/>
      </parallel>
    </symmetrical>
  </constraints>
</gesture>
```

Generated gesture: gesture (mpeg)
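To make the structure of such a gesture specification concrete, the following minimal sketch reads the example with Python's standard `xml.etree.ElementTree` module and collects its static constraints. It is not part of ACE; the function name `read_static_constraints` is hypothetical, and the sketch only handles the element layout shown in the example above.

```python
import xml.etree.ElementTree as ET

# The gesture example from this page, copied verbatim.
MURML_GESTURE = """
<gesture id="gesture_0">
  <constraints>
    <symmetrical dominant="right_arm" symmetry="SymMS">
      <parallel>
        <static slot="PalmOrientation" value="DirL"/>
        <static slot="ExtFingerOrientation" value="DirA"/>
        <static slot="HandShape" value="BSflat"/>
        <static slot="HandLocation" value="LocUpperChest LocCenterRight LocNorm"/>
      </parallel>
    </symmetrical>
  </constraints>
</gesture>
"""


def read_static_constraints(xml_text):
    """Hypothetical helper: return (gesture id, dominant arm, symmetry, slots).

    Assumes exactly the layout of the example: one <symmetrical> element
    under <constraints>, containing <static slot=... value=.../> entries.
    """
    root = ET.fromstring(xml_text.strip())
    sym = root.find("./constraints/symmetrical")
    slots = {s.get("slot"): s.get("value") for s in root.iter("static")}
    return root.get("id"), sym.get("dominant"), sym.get("symmetry"), slots


gesture_id, dominant, symmetry, slots = read_static_constraints(MURML_GESTURE)
print(gesture_id, dominant, symmetry)
for slot, value in slots.items():
    print(f"  {slot}: {value}")
```

The four slots (palm orientation, extended finger orientation, hand shape, hand location) are the features that the stroke phase has to reproduce; how ACE turns them into joint motion is not covered by this sketch.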

Facial expression: The agent's face model comprises 21 different muscles that systematically deform the facial geometry. Facial actions like eye blink, animated speech, and emotional expressions employ muscle contractions in a coordinated way.

Speech synthesis: Our Text-to-Speech system builds on and extends the capabilities of txt2pho and MBROLA. It controls prosodic parameters like speech rate and intonation. Furthermore, it supports contrastive stress and offers mechanisms to synchronize pitch peaks in the speech output with external events.

Speech animation: Lip-sync speech animations are created from the phonetic representation provided by the TTS system. To this end, realistic face postures during the articulation of different phonemes (visemes) have been defined in terms of muscle contractions and are interpolated in a timed fashion.
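The idea of interpolating viseme postures over time can be illustrated with a small sketch. Everything in it is an assumption made for illustration: the muscle names, the contraction values, the keyframe times, and the choice of linear interpolation (the page does not state which interpolation scheme ACE uses).

```python
from bisect import bisect_right

# Hypothetical viseme table: muscle name -> contraction in [0, 1].
# Names and values are illustrative, not taken from ACE.
VISEMES = {
    "rest": {"jaw_open": 0.0, "lip_round": 0.0},
    "a": {"jaw_open": 0.8, "lip_round": 0.1},
    "o": {"jaw_open": 0.5, "lip_round": 0.9},
}


def face_posture(keyframes, t):
    """Linearly interpolate muscle contractions at time t (seconds).

    keyframes: list of (time, viseme_name) with strictly increasing times,
    e.g. derived from the phoneme timings a TTS system reports.
    Before the first and after the last keyframe the posture is held.
    """
    times = [time for time, _ in keyframes]
    i = bisect_right(times, t)
    if i == 0:
        return dict(VISEMES[keyframes[0][1]])
    if i == len(keyframes):
        return dict(VISEMES[keyframes[-1][1]])
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    w = (t - t0) / (t1 - t0)  # blend weight between the two keyframes
    a, b = VISEMES[v0], VISEMES[v1]
    return {muscle: (1 - w) * a[muscle] + w * b[muscle] for muscle in a}


track = [(0.0, "rest"), (0.2, "a"), (0.4, "o")]
posture = face_posture(track, 0.1)  # halfway between "rest" and "a"
print(posture)
```

A real system would likely use smoother blending and account for coarticulation; the sketch only shows how timed keyframes and per-muscle contraction values fit together.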

MURML utterance specification:

```xml
<utterance>
  <specification>
    And now take <time id="t1"/> this bar <time id="t2" chunkborder="true"/>
    and make it <time id="t3"/> this big. <time id="t4"/>
  </specification>
  <behaviorspec id="gesture_1">
    <gesture>
      <affiliate onset="t1" end="t2" focus="this"/>
      <function name="refer_to_loc">
        <param name="refloc" value="$Loc-Bar_1"/>
      </function>
    </gesture>
  </behaviorspec>
  <behaviorspec id="gesture_2">
    <gesture>
      <affiliate onset="t3" end="t4"/>
      <constraints>
        <symmetrical dominant="right_arm" symmetry="SymMS">
          <parallel>
            <static slot="HandShape" value="BSflat (FBround all o) (ThCpart o)"/>
            <static slot="ExtFingerOrientation" value="DirA"/>
            <static slot="PalmOrientation" value="DirL"/>
            <static slot="HandLocation" value="LocLowerChest LocCenterRight LocNorm"/>
          </parallel>
        </symmetrical>
      </constraints>
    </gesture>
  </behaviorspec>
</utterance>
```

Generated utterance: utterance (mpeg) (German speech)
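The utterance specification ties each gesture to a span of words through `<time>` markers and the `onset`/`end` attributes of `<affiliate>`. The following sketch, again using only Python's standard library and not ACE itself, resolves those references to the words they enclose. The function name `affiliated_words` is hypothetical, and the example is abbreviated to the elements needed for this lookup.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the utterance example: gesture bodies are reduced
# to their <affiliate> elements, which is all this sketch inspects.
UTTERANCE = """
<utterance>
  <specification>
    And now take <time id="t1"/> this bar <time id="t2" chunkborder="true"/>
    and make it <time id="t3"/> this big. <time id="t4"/>
  </specification>
  <behaviorspec id="gesture_1">
    <gesture><affiliate onset="t1" end="t2" focus="this"/></gesture>
  </behaviorspec>
  <behaviorspec id="gesture_2">
    <gesture><affiliate onset="t3" end="t4"/></gesture>
  </behaviorspec>
</utterance>
"""


def affiliated_words(xml_text):
    """Hypothetical helper: map each behaviorspec id to its affiliated words."""
    root = ET.fromstring(xml_text.strip())
    spec = root.find("specification")

    # Walk the mixed content: text before the first marker, then each
    # marker followed by the text (its "tail") up to the next marker.
    words = (spec.text or "").split()
    marker_pos = {}
    for marker in spec:
        marker_pos[marker.get("id")] = len(words)
        words.extend((marker.tail or "").split())

    result = {}
    for behavior in root.iter("behaviorspec"):
        aff = behavior.find("./gesture/affiliate")
        start, stop = marker_pos[aff.get("onset")], marker_pos[aff.get("end")]
        result[behavior.get("id")] = words[start:stop]
    return result


spans = affiliated_words(UTTERANCE)
for gesture_id, span in spans.items():
    print(gesture_id, "->", " ".join(span))
```

In this reading, `gesture_1` accompanies "this bar" and `gesture_2` accompanies "this big."; the actual timing of the gesture strokes relative to the synthesized speech is decided by the toolkit at run time.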