Dialogue Coordination for Sociable Agents

Hendrik Buschmeier and Stefan Kopp

Smooth and trouble-free interaction in dialogue is only possible if interlocutors are able to coordinate their actions with each other. Because of this, we think that an understanding of how human coordination devices work is the basis for successful spoken language computer interfaces such as embodied conversational agents or dialogue systems. In this research project we model two important coordination mechanisms that are commonly found in human-human interaction: feedback and adaptation.

Feedback

Feedback is an expressive, economical and rapid coordination device used by listeners to signal contact, perception, understanding and agreement, as well as their attitude towards a speaker's utterance. Feedback can be conveyed in the form of short and unobtrusive verbal signals (called ‘backchannels’) or via nonverbal behaviour such as head gestures, eye gaze or facial expression.

A range of research projects have already enabled embodied conversational agents to provide verbal as well as nonverbal backchannels to human speakers talking to them. In contrast, the aim of this project is to create an attentive speaker agent that learns to recognise, interpret and react appropriately to feedback signals provided by human listeners. Furthermore, this knowledge about feedback understanding will then be used to generate meaningful feedback for human users speaking with the agent.

Adaptation

People in dialogue can be observed to adapt to each other. For example, after interacting for a certain period of time, they tend to use the same vocabulary and syntactic constructions. Several convincing (and most likely not independent) theories from different perspectives offer explanations for this phenomenon, among them the mechanistic interactive alignment account of dialogue, which sees priming in the language processing system as the reason for adaptation (then called ‘alignment’) on the linguistic level.

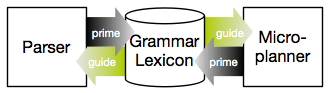

As an example of low-level coordination in dialogue, we proposed a priming-based computational model of linguistic alignment and implemented it in a microplanning component for natural language generation as well as in a parser. In this model, language use (i.e., generation and parsing) is, on the one hand, guided by activation values attached to linguistic resources (grammar, lexicon) and, on the other hand, influences these activation values through priming.
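The two-way relationship between language use and activation values described above can be illustrated with a small sketch. It is not the project's microplanner or parser: the class name `PrimedLexicon`, the additive `boost`, the multiplicative `decay` and the example words are all hypothetical choices made for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class PrimedLexicon:
    """Toy store of activation values for competing linguistic resources.

    All parameters are illustrative assumptions, not values from the project.
    """
    boost: float = 1.0   # assumed activation added each time an item is used
    decay: float = 0.5   # assumed factor applied to all activations per turn
    activation: dict = field(default_factory=dict)

    def prime(self, item: str) -> None:
        # Using an item (when parsing or generating) raises its activation.
        self.activation[item] = self.activation.get(item, 0.0) + self.boost

    def step(self) -> None:
        # Activations fade as the dialogue moves on.
        for item in self.activation:
            self.activation[item] *= self.decay

    def choose(self, candidates: list) -> str:
        # Generation is guided by activation: pick the most active candidate.
        # On ties, max() returns the first candidate in the list.
        return max(candidates, key=lambda c: self.activation.get(c, 0.0))


lexicon = PrimedLexicon()
options = ["sofa", "couch"]

before = lexicon.choose(options)   # nothing primed yet, so the first option wins
lexicon.prime("couch")             # the partner's utterance contained "couch"
lexicon.step()                     # one turn passes
after = lexicon.choose(options)    # the primed word is now preferred
lexicon.prime(after)               # the agent's own use primes the word again

print(before, after)
```

In this sketch both parsing the partner's utterance and producing one's own call `prime`, which is how repeated use of the same word reinforces itself over the course of an interaction.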

Evaluation of the microplanner on a human-human dialogue corpus shows that the alignment-capable version outperforms a baseline version in which alignment was deactivated.

Publications

All publications can be downloaded from Hendrik Buschmeier's website.

Kopp, S., van Welbergen, H., Yaghoubzadeh, R., & Buschmeier, H. (online first). An architecture for fluid real-time conversational agents: Integrating incremental output generation and input processing. Journal on Multimodal User Interfaces.

Buschmeier, H. & Kopp, S. (2013). Co-constructing grounded symbols—Feedback and incremental adaptation in human–agent dialogue. Künstliche Intelligenz, 27:137–143.

Buschmeier, H. & Kopp, S. (2012). Understanding how well you understood – Context-sensitive interpretation of multimodal user feedback. In Proceedings of the 12th International Conference on Intelligent Virtual Agents, pp. 517–519, Santa Cruz, CA.

Buschmeier, H. & Kopp, S. (2012). Using a Bayesian model of the listener to unveil the dialogue information state. In SemDial 2012: Proceedings of the 16th Workshop on the Semantics and Pragmatics of Dialogue, pp. 12–20, Paris, France.

Buschmeier, H. & Kopp, S. (2012). Adapting language production to listener feedback behaviour. In Proceedings of the Interdisciplinary Workshop on Feedback Behaviors in Dialog, pp. 7–10, Stevenson, WA.

Włodarczak, M., Buschmeier, H., Malisz, Z., Kopp, S., & Wagner, P. (2012). Listener head gestures and verbal feedback expressions in a distraction task. In Proceedings of the Interdisciplinary Workshop on Feedback Behaviors in Dialog, pp. 93–96, Stevenson, WA.

Buschmeier, H., Baumann, T., Dosch, B., Kopp, S., & Schlangen, D. (2012). Combining incremental language generation and incremental speech synthesis for adaptive information presentation. In Proceedings of the 13th Annual Meeting of the Special Interest Group on Discourse and Dialogue, pp. 295–303, Seoul, South Korea.

Striegnitz, K., Buschmeier, H., & Kopp, S. (2012). Referring in installments: A corpus study of spoken object references in an interactive virtual environment. In Proceedings of the 7th International Natural Language Generation Conference, pp. 12–16, Utica, IL.

Malisz, Z., Włodarczak, M., Buschmeier, H., Kopp, S., & Wagner, P. (2012). Prosodic characteristics of feedback expressions in distracted and non-distracted listeners. In Proceedings of The Listening Talker. An Interdisciplinary Workshop on Natural and Synthetic Modification of Speech in Response to Listening Conditions, pp. 36–39, Edinburgh, UK.

Buschmeier, H., & Kopp, S. (2011). Unveiling the information state with a Bayesian model of the listener. In SemDial 2011: Proceedings of the 15th Workshop on the Semantics and Pragmatics of Dialogue, pp. 178–179, Los Angeles, CA, USA.

Buschmeier, H., & Kopp, S. (2011). Towards conversational agents that attend to and adapt to communicative user feedback. In Proceedings of the 11th International Conference on Intelligent Virtual Agents, pp. 169–182, Reykjavik, Iceland.

Buschmeier, H., Malisz, Z., Włodarczak, M., Kopp, S., & Wagner, P. (2011). ‘Are you sure you're paying attention?’ – ‘Uh-huh’. Communicating understanding as a marker of attentiveness. In Proceedings of INTERSPEECH 2011, pp. 2057–2060, Florence, Italy.

Schlangen, D., Baumann, T., Buschmeier, H., Kopp, S., Skantze, G., & Yaghoubzadeh, R. (2010). Middleware for incremental processing in conversational agents. In Proceedings of SIGDIAL 2010: the 11th Annual Meeting of the Special Interest Group in Discourse and Dialogue, pp. 51–54, Tokyo, Japan.

Buschmeier, H., Bergmann, K., & Kopp, S. (2010). Modelling and evaluation of lexical and syntactic alignment with a priming-based microplanner. In Krahmer, E. & Theune, M., editors, Empirical Methods in Natural Language Generation, pp. 85–104, Springer, Berlin/Heidelberg, Germany.

Buschmeier, H., Bergmann, K., & Kopp, S. (2010). Adaptive expressiveness – Virtual conversational agents that can align to their interaction partner. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems, pp. 91–98, Toronto, Canada.

Buschmeier, H., Bergmann, K., & Kopp, S. (2009). An alignment-capable microplanner for natural language generation. In Proceedings of the 12th European Workshop on Natural Language Generation, pp. 82–89, Athens, Greece.

Software

IPAACA – Incremental Processing Architecture for Artificial Conversational Agents

[Formerly: Sociable Agents Group]
