MetaGest - Modifying functions in gestures as a meta-communicative resource

Farina Freigang and Stefan Kopp

Why meta-communication?

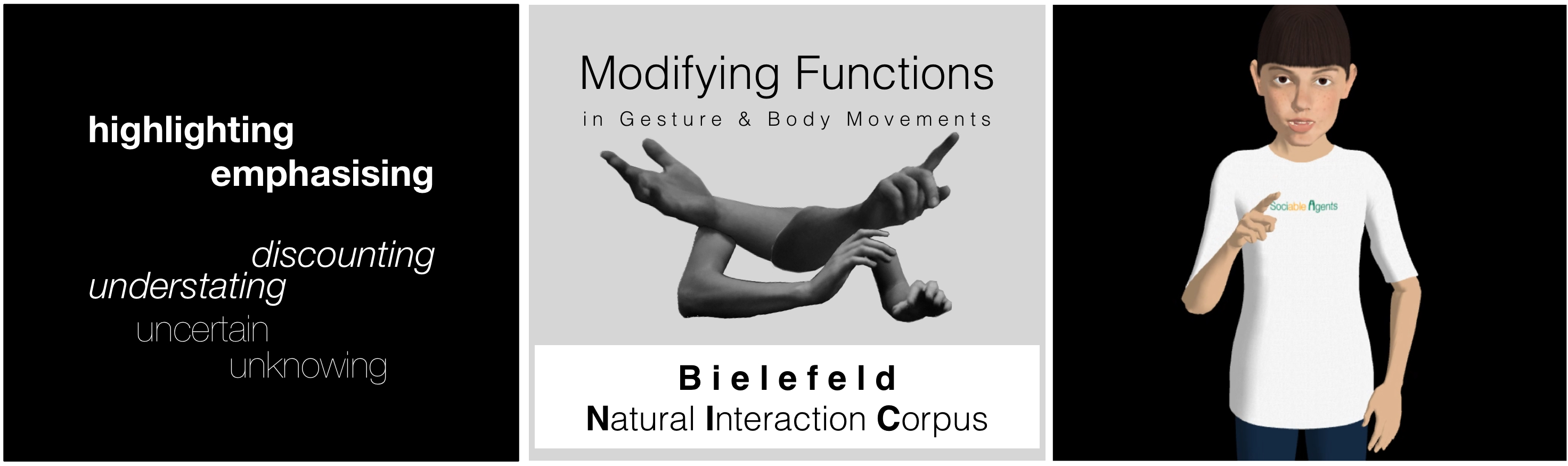

Meta-communication is an integral part of natural conversations among humans. Speakers add (indirect) cues to the intended meaning of their utterances. One particularly interesting phenomenon of meta-communication is (pragmatic) modification, when humans apply non-verbal signals such as gestures and body language in order to classify the semantic content of their utterances for the listener. By doing this, speakers want to communicate their viewpoints, convictions, knowledge, attitudes, among others. Listeners perceive those signals in addition to the semantics and integrate everything into a congruent message. Which signals are perceived as more meaningful and, therefore, are integrated with more weight than others depends on the articulation accuracy of the speaker and the perception sensitivity of the listener.

Modifying functions in gesture

This research is primarily dedicated to the under-researched area of (pragmatic) modifying functions in gestures. We (Freigang & Kopp, 2015, 2016) define a gesture as carrying a modification if it changes the meaning of the whole utterance relative to the pure semantics, or the proposition, of the verbally or gesturally signified content. The modification acts upon this content, carries meaning beyond mere propositional content, and is applied analogously to it. We identified three major functions of modification: a focusing, an epistemic and an attitudinal function.

Each of these functions can have a positive or a negative manifestation: a positive focusing function, for example, highlights an aspect, whereas a negative one takes something out of focus. Although the function types are analysed separately, the functions a gesture may carry are not mutually exclusive: an index-finger gesture pointing in the air carries both a positive focusing and a positive epistemic function (or at least signals them as such) at the same time. The findings are supported by empirical data, namely the Bielefeld Natural Interaction Corpus (NIC), a dataset recorded and compiled specifically for this research that consists of numerous natural communicative gestures and body movements.
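The taxonomy described above (three functions, each with a positive or negative manifestation, and several functions possibly co-occurring on one gesture) could be represented roughly as follows. This is only a minimal illustrative sketch: the names `Function`, `Polarity`, and `GestureAnnotation` are hypothetical and do not reflect the annotation scheme actually used for the NIC.

```python
from dataclasses import dataclass
from enum import Enum


class Function(Enum):
    """The three major modifying functions named in the text."""
    FOCUSING = "focusing"
    EPISTEMIC = "epistemic"
    ATTITUDINAL = "attitudinal"


class Polarity(Enum):
    """Each function can have a positive or a negative manifestation."""
    POSITIVE = "positive"
    NEGATIVE = "negative"


@dataclass(frozen=True)
class GestureAnnotation:
    """Hypothetical record: a gesture label plus the functions it carries.

    Functions are stored as a set of (Function, Polarity) pairs because
    a single gesture may carry more than one function at the same time.
    """
    label: str
    functions: frozenset

    def has(self, function: Function, polarity: Polarity) -> bool:
        return (function, polarity) in self.functions


# The example from the text: an index-finger gesture pointing in the air
# carries a positive focusing and a positive epistemic function.
raised_index_finger = GestureAnnotation(
    label="index finger pointing in the air",
    functions=frozenset({
        (Function.FOCUSING, Polarity.POSITIVE),
        (Function.EPISTEMIC, Polarity.POSITIVE),
    }),
)
```

Storing the functions as a set rather than a single field mirrors the observation that the function types are analysed separately but are not mutually exclusive.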

Multimodality and incongruence

Taking an integrative approach, we consider various modalities. Although gestural modification is researched predominantly, we observed parallels to other modifications such as modifying keywords (modal particles and sentential adjectives) and prosodic and facial markings in an utterance. Further investigations examine incongruences or rather polarities between modalities and/or functions of uttered content. The simplest but most pronounced case of polarity is the integration of positive and negative content within an utterance. Also, utterances may contain contrasting elements as deployed in irony.

Virtual humans

With this project we contribute foundational research on gesture and nonverbal communication. A further objective is the evaluation of our findings with virtual humans. We postulate that virtual humans enhanced with gestures of modifying functions have richer communicative abilities. One effect of such communicative abilities is that recall of content is higher when it is presented with a positive focusing function (‘this is important’) than with a negative epistemic one (‘I don’t know’). The ultimate aim of this research project is to integrate our empirical findings with other background findings in a hybrid computational model that captures pragmatic modifications as they occur in ordinary human interactions.

Publications - talks - posters

Freigang, F., & Kopp, S. (2016). This is what’s important – using speech and gesture to create focus in multimodal utterance. In D. Traum, W. Swartout, P. Khooshabeh, S. Kopp, S. Scherer & A. Leuski (Eds.), Lecture Notes in Computer Science: Vol. 10011. Intelligent Virtual Agents (pp. 96-109). Springer International Publishing.

How gestures tune meaning of multimodal utterances: Analyses of modifying functions. Poster presentation at the 7th Conference of the International Society for Gesture Studies (ISGS 7). Paris, July 18-22, 2016.

Brushing up or aside? - Analyzing the modifying functions of gesture in different multimodal utterance contexts. Oral presentation at the Workshop Mapping Multimodal Dialogue (MaMuD 3), Lille, France, November 19-20, 2015.

Freigang, F., & Kopp, S. (2015). Analysing the Modifying Functions of Gesture in Multimodal Utterances. In G. Ferré & M. Tutton (Eds.), Proceedings of Gesture and Speech Integration (GESPIN 4) (pp. 107-112).

Beyond propositional content: Modifying functions in gesture. Oral presentation at the Workshop Mapping Multimodal Dialogue (MaMuD 2), Leuven, Belgium, November 21-22, 2014.

Beyond propositional content: Modifying functions in gesture. Oral presentation at the KogWis PhD-Symposium. Tübingen, Germany, September 28, 2014.

The tone we don't hear - Analysing the modifying functions of gestures. Oral presentation at the 6th Conference of the International Society for Gesture Studies (ISGS 6). San Diego, CA, USA, July 8-11, 2014.

Exploring the speech-gesture semantic continuum. Oral presentation at the 1st European Symposium on Multimodal Communication. Valletta, Malta, October 17-18, 2013.
